Security Frontiers 2025 didn’t feel like your typical cybersecurity event. It was a series of short, focused demonstrations from practitioners (engineers, researchers, red teamers, and AI builders) who logged in from across the world to share what they’ve been working on, what’s working, and what still needs solving for AI in cybersecurity.

What started as a virtual gathering quickly turned into something more: a shared space for experimentation, candid discussion, and hands-on demos. The goal was to show what the future of AI in security already looks like: in code, in context, and in production.

A few clear themes stood out across panels, live demos, and side chats. From how AI is reshaping the SOC to the quiet rise of AI-powered threats, these conversations revealed more than just progress; they mapped out where we’re headed next.

We’ve put the presentations together in this YouTube playlist. Below are seven takeaways that defined Security Frontiers 2025.

Takeaway 1: From Ideas to Implementation

If 2024 was the year of questions (Can we trust AI in security? What should we automate?), then 2025 is the year of answers. It was clear at Security Frontiers that the experimentation phase is giving way to real deployment.

Caleb Sima captured it best when he said the shift isn’t about belief anymore: “The question is no longer ‘can this work’; it’s how fast we can get it running in production.” Across the board, teams are moving from proof-of-concept to pipelines. The demos weren’t just theoretical: they showed working systems already triaging alerts, analyzing threats, and producing real, usable outputs.

Daniel Mesler framed this moment as the “build” phase of the AI adoption curve. Teams aren’t waiting for perfect tools or fully autonomous agents—they’re building lightweight, context-aware solutions that slot into real-world workflows and start delivering value immediately.

For Edward Wu, this shift is playing out on the front lines. From inside SOCs, he sees the change firsthand: organizations aren’t asking if AI can help with investigations. They’re asking when to deploy and how fast they can scale.

Takeaway 2: AI Is Reshaping Security Operations

One of the clearest signals from Security Frontiers 2025: AI isn’t just supporting security teams; it’s starting to do the work.

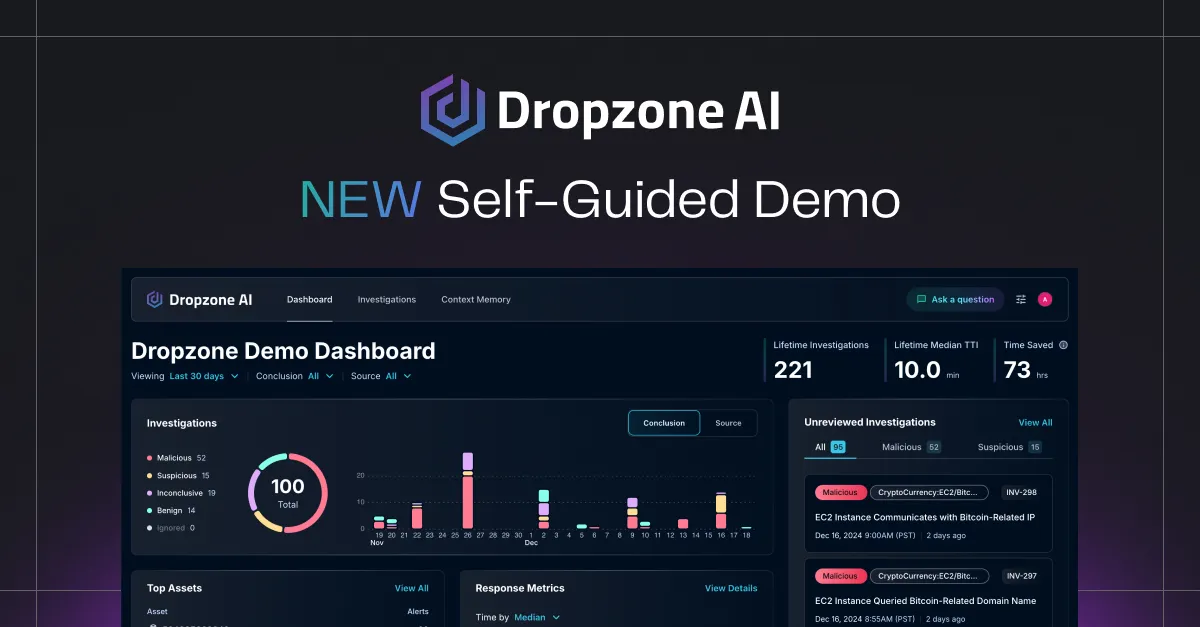

The most mature tools on display weren’t focused on dashboards or alerts. They were focused on action. AI systems are now handling full Tier 1 investigations, pulling logs, enriching context, forming conclusions, and delivering structured, decision-ready reports. In many cases, no human needed to touch the alert until it was time to make a final call.
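The stages named above (pull logs, enrich context, form a conclusion, deliver a structured report) can be sketched as a small pipeline. This is a minimal illustration, not any vendor's implementation: the function names, the alert and event fields, and the known-bad IP list are all assumptions made up for the example, and a real system would query a SIEM and a threat-intel source instead of in-memory data.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    """Structured, decision-ready output handed to a human reviewer."""
    alert_id: str
    findings: list = field(default_factory=list)
    verdict: str = "needs_review"


def pull_logs(alert):
    # Hypothetical stand-in for a SIEM query: keep events from the alerting host.
    return [e for e in alert["events"] if e["host"] == alert["host"]]


def enrich(logs, known_bad_ips):
    # Add context to each event; here, a single (assumed) threat-intel lookup.
    return [dict(e, known_bad=e["src_ip"] in known_bad_ips) for e in logs]


def conclude(alert, enriched):
    # Turn enriched evidence into findings and a recommended verdict.
    report = Report(alert_id=alert["id"])
    for event in enriched:
        if event["known_bad"]:
            report.findings.append(f"{event['src_ip']} is on the known-bad list")
    report.verdict = "likely_malicious" if report.findings else "likely_benign"
    return report


def investigate(alert, known_bad_ips):
    """Run the whole Tier 1 flow; the verdict is a recommendation, not a final call."""
    return conclude(alert, enrich(pull_logs(alert), known_bad_ips))


alert = {
    "id": "A-1",
    "host": "web-01",
    "events": [
        {"host": "web-01", "src_ip": "198.51.100.9"},
        {"host": "db-02", "src_ip": "203.0.113.5"},
    ],
}
report = investigate(alert, known_bad_ips={"198.51.100.9"})
```

The report carries both the evidence and a recommended verdict, so the human making the final call can check the reasoning rather than redo the investigation.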

Edward Wu shared how this shift is already happening in practice. At Dropzone, their AI SOC analyst picks up every alert the moment it arrives—no queue, no waiting. It investigates, writes the report, and hands it off to a human for review, often before a traditional workflow acknowledges the alert.

Caleb Sima raised an important challenge to the industry: if we don’t see more of these tools in production by year’s end, we may have overestimated what AI is ready to handle. But for now, what’s working is clear: automation that eliminates triage bottlenecks, cuts false positives, and gives analysts their time back.

Takeaway 3: AI Still Needs Human Oversight

Even as AI takes on more investigative load, human oversight remains a critical piece of the equation. Across sessions, speakers reinforced a common truth: the best outcomes come from collaboration, not handoff.

From phishing investigations to SOC triage, AI is proving remarkably effective at pattern recognition, correlation, and summarization. But when it comes to nuance—understanding the business context, weighing gray-area risks, or spotting social engineering tactics—humans still play a necessary role.

Tools like PII Detective and agent-based systems can handle most repetitive tasks, but they still need a human to review outputs, confirm conclusions, and catch edge cases. And that’s not a technology failure—it’s part of building trust.

As Edward Wu pointed out, the goal isn’t full autonomy—it’s scoped autonomy. Systems that can reason and execute within clearly defined boundaries already deliver value. But turning them loose without oversight risks missing the forest for the trees.
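One way to picture scoped autonomy is an allowlist: the agent runs pre-approved actions on its own and routes everything else to a human. The sketch below is only an illustration of that idea under assumed names (`ScopedAgent`, the action strings, the review queue); it is not how any product discussed at the event works.

```python
from dataclasses import dataclass, field


@dataclass
class ScopedAgent:
    """Hypothetical wrapper limiting which actions an agent may run unattended."""
    allowed_actions: frozenset
    needs_review: list = field(default_factory=list)

    def execute(self, action: str, target: str) -> str:
        if action in self.allowed_actions:
            # Inside the boundary: the agent acts without waiting for a human.
            return f"executed {action} on {target}"
        # Outside the boundary: record the request for human review instead.
        self.needs_review.append((action, target))
        return f"escalated {action} on {target}"


agent = ScopedAgent(allowed_actions=frozenset({"query_logs", "enrich_ip"}))
in_scope = agent.execute("query_logs", "host-17")
out_of_scope = agent.execute("isolate_host", "host-17")
```

Read-only steps stay fast, while a disruptive step like isolating a host lands in `needs_review` for an analyst to approve.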

As LLMs get better, the set of tasks we can trust to automation will keep growing. For now, though, that final layer of human judgment keeps AI grounded and security effective.

Takeaway 4: Prompt Engineering and Context Are the New Code

One of the most striking shifts at Security Frontiers 2025 was the growing consensus that LLMs aren’t just tools but systems that require careful design. The days of throwing raw prompts at a model and hoping for magic are over. What matters now is structure, memory, and context.

The most successful demos and tools shared a common thread: they didn’t just generate answers—they showed their reasoning. Agents were built with multiple memory layers—hypotheses, evidence, and long-term recall—allowing them to carry context across tasks and make informed decisions.

Edward Wu broke this down during the panel: agents need memory, not just inputs. Without it, they’re guessing. With it, they start to reason. This architectural shift means prompt design isn’t just about clarity—it’s about giving the model a working memory and a purpose.
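The memory layers mentioned above (hypotheses, evidence, and long-term recall) could be modeled as a small data structure that is rendered into the model's context on every step. This is a rough sketch under assumptions: the class name, field names, and the plain-text context format are invented for illustration and do not describe any presenter's actual architecture.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical three-layer memory for an investigation agent."""
    hypotheses: list[str] = field(default_factory=list)      # what the agent currently suspects
    evidence: list[str] = field(default_factory=list)        # facts gathered during this task
    long_term: dict[str, str] = field(default_factory=dict)  # knowledge kept across tasks

    def build_context(self, task: str) -> str:
        # Render every layer into text that would be placed in the prompt.
        lines = [f"Task: {task}"]
        lines += [f"Hypothesis: {h}" for h in self.hypotheses]
        lines += [f"Evidence: {e}" for e in self.evidence]
        lines += [f"Known ({k}): {v}" for k, v in sorted(self.long_term.items())]
        return "\n".join(lines)


memory = AgentMemory()
memory.long_term["vpn_range"] = "10.8.0.0/16 is the corporate VPN"
memory.hypotheses.append("Login from a new IP is a travelling employee")
memory.evidence.append("Source IP falls inside 10.8.0.0/16")
prompt = memory.build_context("Triage impossible-travel alert")
```

Because the context is rebuilt from the stored layers each time, the agent carries its working theory and supporting facts from one step to the next instead of starting from a bare prompt.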

Panelists agreed that in this new world prompt engineering is more than a skill: it’s infrastructure. It defines what your agent can do, how it behaves, and whether it can be trusted. And as LLMs grow more capable, context will be what separates useful agents from unreliable ones.

Takeaway 5: AI-Powered Attacks Are Already Here—We Just Can’t See Them Clearly

Unlike the flashy headlines we often expect from new attack techniques, AI-driven threats are creeping in quietly. That was the unsettling takeaway echoed by panelists at Security Frontiers.

Daniel Mesler emphasized that we may already be under siege from AI-augmented attacks—we’re just not detecting them as something new. These campaigns don’t need to look novel to be effective. Instead, they’re often faster, more personalized, and more scalable versions of familiar tactics like phishing, credential stuffing, or lateral movement.

Caleb Sima pointed out that attackers may already use AI to fuzz applications, identify vulnerabilities, and fine-tune payloads—not with wild new malware but with more efficient exploitation of existing weaknesses.

This evolution puts pressure on defenders to stop looking for novelty and start looking for signals of scale and sophistication. Are phishing emails suddenly sharper and more targeted? Are brute-force attempts getting oddly selective? AI might be behind the curtain.

Takeaway 6: Build Tools for AI to Use, Not Just Humans

A subtle but powerful shift emerged across multiple sessions: the next wave of security tools won’t be built for humans but for agents.

Josh Larson’s demo of Reaper, a lightweight fuzzing tool designed specifically for AI agents to operate autonomously, made this point crystal clear. It wasn’t built with a traditional UI or manual workflows in mind. It was built with an API-first mindset, assuming the operator wouldn’t be a person—but an AI.

This approach reflects a deeper change in how security infrastructure is evolving. Instead of designing tools with user interfaces and dashboards at the center, developers are building modular, callable systems that agents can invoke, interrogate, and act through. Think machine-to-machine, not analyst-to-tool.
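To make "callable systems that agents can invoke and interrogate" concrete, here is a minimal sketch of a tool registry with machine-readable descriptions and JSON in, JSON out. Everything in it is an assumption for illustration: the registry, the `lookup_ip` tool, and the request format are hypothetical, and it does not use or imitate Reaper's interface or any specific protocol SDK.

```python
import json

TOOLS = {}  # hypothetical in-process registry: tool name -> metadata and function


def tool(name, description, params):
    """Register a function together with a description an agent can read."""
    def register(fn):
        TOOLS[name] = {"description": description, "params": params, "fn": fn}
        return fn
    return register


@tool("lookup_ip", "Return reputation data for an IP address.", {"ip": "string"})
def lookup_ip(ip):
    # Placeholder result; a real tool would query a threat-intel source.
    return {"ip": ip, "reputation": "unknown"}


def describe_tools():
    """What an agent interrogates to learn which tools exist and what they take."""
    catalog = {
        name: {"description": t["description"], "params": t["params"]}
        for name, t in TOOLS.items()
    }
    return json.dumps(catalog, sort_keys=True)


def call_tool(request_json):
    """Structured errors instead of UI messages, so the caller can react in code."""
    request = json.loads(request_json)
    entry = TOOLS.get(request.get("tool"))
    if entry is None:
        return json.dumps({"error": "unknown tool"})
    supplied = request.get("args", {})
    missing = [p for p in entry["params"] if p not in supplied]
    if missing:
        return json.dumps({"error": f"missing args: {missing}"})
    # Pass only declared parameters so stray keys cannot break the call.
    args = {p: supplied[p] for p in entry["params"]}
    return json.dumps({"result": entry["fn"](**args)})


response = json.loads(call_tool('{"tool": "lookup_ip", "args": {"ip": "203.0.113.7"}}'))
```

There is no screen to read here: discovery, invocation, and failure are all plain data, which is what lets an agent drive the tool without a person in the loop.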

Protocols like Anthropic’s Model Context Protocol (MCP) push this even further, enabling agents to interact with tools in structured, predictable ways—no playbooks or hardcoding required.

The message was clear: if you’re building security tools today, build for agents. Because increasingly, those agents aren’t just assistants. They’re the ones driving the investigation.

Takeaway 7: Misuse Is Real, and Accessible

One of the most unsettling moments of Security Frontiers 2025 came from Jeff Sims’ demo of Dark Watch, an autonomous surveillance agent powered by a large language model. What made it chilling wasn’t its complexity—it was how simple it was to build.

With just a graph database, public social media data, and an LLM agent, the system could crawl, correlate, and flag individuals based on ideological sentiment. It was a live demonstration of how non-technical users could assemble and deploy AI-driven surveillance systems using freely available tools. And it drove home an important point: AI doesn’t need access to your systems to be dangerous—it just needs access to your data.

The implications go beyond traditional cybersecurity. The potential for abuse in surveillance, disinformation, and social targeting is real—and rising. As AI tools become more accessible, the barrier to creating weaponized systems is dropping fast.

The panel didn’t offer easy answers. But it left a clear call to action: ethical boundaries and governance can’t be an afterthought. If this kind of power is possible without code, the conversation must expand beyond threat detection and include threat prevention at the design level.

A Quiet Revolution, Built In Public

Security Frontiers 2025 didn’t deliver big product launches or sweeping predictions. Instead, it offered something more valuable: real insight from people doing the work, building with AI in the open, and learning as they go.

What stood out was the honesty. The panelists and presenters weren’t claiming that AI would solve everything. They showed how it’s already solving some things, and what still needs human hands on the wheel. They talked about deployment, trust, limitations, architecture, misuse, and ethics with clarity and candor.

That’s what made this event feel different. It was about the present—messy, promising, and already in motion.

So here’s the ask: if you care about this space, don’t just watch from the sidelines. Watch the panel. Share it with your team. Start the conversation. And if you’re building, testing, or even just curious, bring your ideas to the table. This is a community that rewards showing your work.

The quiet revolution is already underway. It’s not hype. It’s happening. And you’re invited.
