Security Frontiers 2025 opened with the kind of panel you hope for at a conference: seasoned security leaders sharing insights and honest perspectives about bringing AI into cybersecurity, without the fluff.

The discussion featured Edward Wu, CEO of Dropzone AI, whose team is actively deploying AI agents inside modern SOCs; Caleb Sima, former Chief Security Officer at Robinhood, veteran of multiple security startups, and Founder of Whiterabbit, a cybersecurity venture studio; and Daniel Miessler, founder of Unsupervised Learning and a cybersecurity strategist with decades of experience across Apple, HP, and beyond.

Together, they kicked off the event with a clear message: AI in security is no longer theoretical, and the stakes are real. Their conversation set the tone for the rest of the conference, moving past hype and into hard questions: What’s working? What isn’t? What are attackers actually doing with AI? And how do we build responsibly in an environment that’s changing by the month?

Whether you missed the live session or want to revisit the highlights, the full panel recording is below. It’s worth watching, especially if you care about building what’s next.

#1 - The Shift from Possibility to Practice

One of the earliest themes to emerge from the panel was a shared sense that the industry has entered a new phase. As Caleb Sima put it, 2024 was full of ideas, prototypes, and proof-of-concepts. But in 2025, the focus has shifted. It’s about turning those concepts into real systems that can hold up in production.

Caleb noted a surge in AI-driven security startups. There’s no shortage of teams building interesting tools, but widespread adoption is still taking shape. Many solutions are promising but not yet deeply embedded in day-to-day operations.

Daniel Miessler framed this as the “build” phase of the adoption curve, when energy shifts from theory to implementation. The question is no longer what’s possible. It’s what’s reliable, scalable, and ready to use.

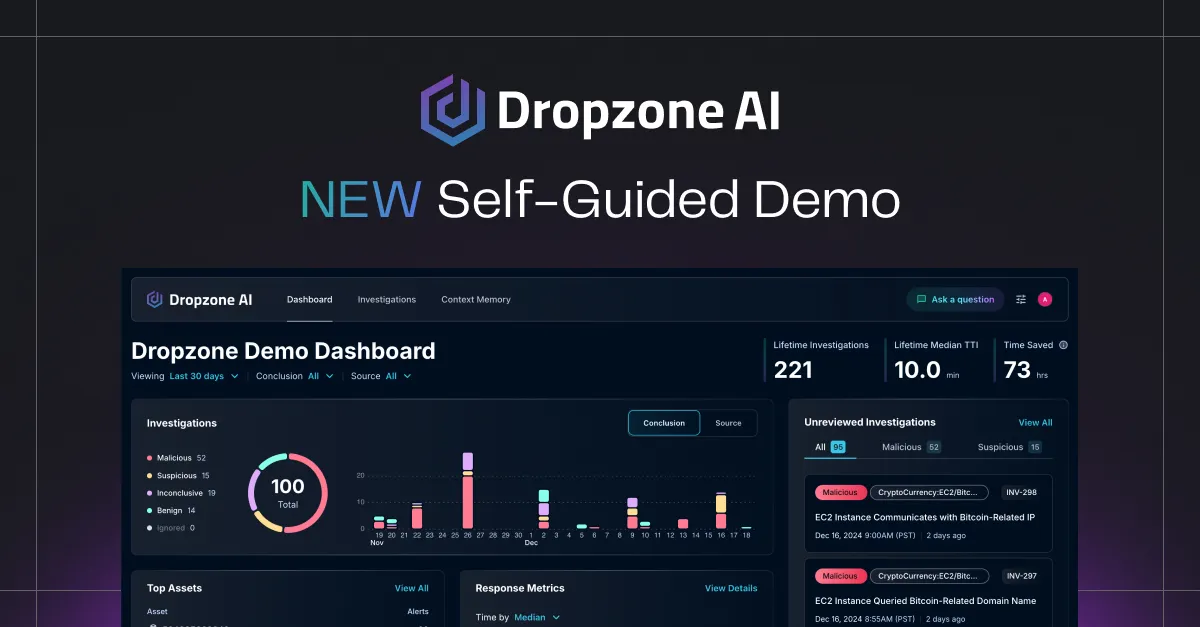

Edward Wu reinforced this shift. From his view in active SOC deployments, the conversation has moved from “Can this work?” to “How fast can we bring this in?” AI is no longer a novelty. It’s becoming a critical part of the modern security toolkit.

#2 - How AI is Actually Helping Security Teams Today

While much of the industry is still catching up to the promise of AI, the panelists pointed to several areas where it’s already making a real impact. The most successful tools aren’t trying to reinvent the entire security stack. They’re filling the gaps that have long strained teams: alert triage, incident investigation, and rapid context gathering.

These use cases aren’t flashy, but they’re critical. Security teams are often overwhelmed by the volume of alerts and the time it takes to sift through false positives to find the ones that matter. AI is starting to step in here, not to replace analysts, but to give them back the hours they used to spend chasing dead ends or digging through logs.

Edward Wu highlighted Dropzone AI’s approach as a prime example. Instead of relying on rigid, pre-built playbooks, their AI agents autonomously investigate alerts, gather relevant context, and produce structured, decision-ready reports. The system doesn’t just automate tasks; it automates investigative reasoning, the work that previously required valuable human brain cycles.

Caleb Sima added a note of cautious optimism. He predicted that by the end of the year, we’ll either start to see meaningful, widely adopted production tools or we’ll have to admit the tech was overhyped. For now, though, the early signs are promising, especially in teams willing to integrate AI where it delivers the most value.

#3 - Attackers and AI: The Quiet Evolution

One of the more sobering threads in the panel conversation came from a simple but unsettling observation: AI-powered attacks may already be here. We just aren’t seeing them clearly. Unlike the arrival of a new malware strain or a zero-day with a name, AI in the hands of threat actors doesn’t announce itself. It blends in.

Daniel Miessler cautioned against expecting some bold, headline-grabbing shift in how attacks look. Instead, he encouraged listeners to focus on changes in volume and quality. If phishing campaigns suddenly become sharper, more personalized, or significantly more frequent, AI might be the force behind the curtain. The technology doesn’t need to reinvent attacks. It only needs to make them faster, cheaper, and harder to detect.

Caleb Sima took it a step further, suggesting that attackers may already be quietly using AI to discover vulnerabilities at scale. With tools like LLMs capable of analyzing binary code or scanning for weak configurations, the reconnaissance phase of an attack could become significantly more powerful without changing the playbook itself.

The panel also touched on enhancements in fuzzing, lateral movement, and social engineering, all areas where AI could boost efficiency without triggering traditional alarms. It’s not just about the threats we can see. It’s about the ones we can’t. And that’s what makes this shift so challenging to defend against.

#4 - Agents, Context, and the Power of Memory

As the conversation turned toward what’s next, the panelists agreed: the real breakthrough in AI for security isn’t just speed; it’s context. The tools making the biggest leap forward are the ones that don’t just react but remember. They don’t just process data. They reason through it.

Edward Wu offered a closer look into how this works inside agent-based systems. At Dropzone AI, for example, the agentic system is built with distinct functions: one for forming and validating hypotheses, another for collecting evidence for findings, and a third for long-term recall of details unique to the environment. This architecture allows the system to do more than respond to a prompt. It lets the agent autonomously build a case, revisit previous decisions, and adapt based on what it’s already learned.
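To make the three functions concrete, here is a minimal Python sketch of how hypothesis formation, evidence collection, and environment memory might fit together. It is an illustration only, not Dropzone AI’s implementation: every name in it (`EnvironmentMemory`, `form_hypotheses`, `collect_evidence`, `investigate`, the alert fields) is hypothetical, and the "reasoning" is a toy rule standing in for what a real agent would delegate to a model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EnvironmentMemory:
    """Long-term recall of details unique to one environment (hypothetical)."""
    facts: dict = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def recall(self, key: str) -> Optional[str]:
        return self.facts.get(key)


@dataclass
class Finding:
    hypothesis: str
    evidence: list
    supported: bool  # True if the evidence still supports the hypothesis


def form_hypotheses(alert: dict) -> list:
    """Propose candidate explanations for an alert (toy rule, not a model)."""
    return [f"{alert['user']} logged in from an unexpected country"]


def collect_evidence(alert: dict, memory: EnvironmentMemory) -> list:
    """Gather facts from the alert itself and from long-term memory."""
    evidence = [f"alert: source_country={alert['source_country']}"]
    known = memory.recall(f"travel:{alert['user']}")
    if known is not None:
        evidence.append(f"memory: {alert['user']} is known to work from {known}")
    return evidence


def investigate(alert: dict, memory: EnvironmentMemory) -> list:
    """Build a case: one finding per hypothesis, validated against memory."""
    findings = []
    for hypothesis in form_hypotheses(alert):
        evidence = collect_evidence(alert, memory)
        # The hypothesis is refuted when memory already explains the country.
        explained = memory.recall(f"travel:{alert['user']}") == alert["source_country"]
        findings.append(Finding(hypothesis, evidence, supported=not explained))
    return findings
```

Running `investigate` on the same alert before and after calling `memory.remember("travel:alice", "DE")` yields different verdicts, which is the point of the third function: what the system has already learned about the environment changes how it reads the next alert.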

The panel noted that this is where emergent behavior begins to appear. When AI tools are given memory and reasoning capabilities, they start to move beyond scripted logic. They begin to exhibit something closer to judgment. Not just pattern recognition, but prioritization. Not just execution, but strategy.

#5 - Risks, Guardrails, and What Still Needs Work

For all the excitement around what AI can do, the panelists were clear-eyed about its limitations. Full autonomy isn’t here yet, and that might be exactly how it should be. The current generation of tools, while impressive, still requires human oversight, because in security the cost of a wrong decision can be high.

Each speaker emphasized the continued importance of human-in-the-loop systems. Whether you’re triaging alerts, investigating incidents, or tagging sensitive data, AI works best today as a collaborator, not a replacement. False positives remain a real concern. So do hallucinations, where the model delivers a confident but incorrect answer. GenAI is powerful but not always predictable without guardrails.

The discussion also touched on the growing need for trust and transparency. As AI systems become more embedded in security workflows, teams need ways to evaluate outputs, measure consistency, and ensure decisions can be traced and explained. Without that, even a technically sound model can struggle to gain adoption.

Regulatory pressure is adding another layer of urgency. As frameworks evolve around AI safety and data protection, organizations will need clearer methods for auditing how these systems make decisions and how those decisions are validated.

#6 - Looking Ahead: What Will 2026 Look Like?

As the conversation wound down, the panelists turned their focus to what the next year might hold and how quickly the landscape is likely to change. The consensus? The real transformation will feel slow until, suddenly, it doesn’t. AI in cybersecurity is following a familiar curve: quiet experimentation, steady refinement, and then, almost overnight, widespread adoption.

Daniel Miessler predicted we’ll soon see AI teammates embedded within security teams, not just as tools but as intelligent collaborators capable of handling complex tasks alongside human analysts. Edward Wu added that these systems won’t be general-purpose intelligences but will have scoped autonomy, allowing them to operate independently within well-defined boundaries.
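One way to picture scoped autonomy is as an explicit allowlist: the agent acts on its own inside the boundary and hands everything else to a person. The Python sketch below is an assumption-laden illustration, not something the panelists described in detail; the `Scope` class, the `route` function, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scope:
    """The set of actions an agent may take without asking (hypothetical)."""
    allowed_actions: frozenset


def route(action: str, scope: Scope) -> str:
    """Execute in-scope actions autonomously; escalate everything else."""
    if action in scope.allowed_actions:
        return "execute"
    return "escalate_to_human"


# Example boundary: read-only investigation steps are in scope,
# while disruptive response actions are not.
triage_scope = Scope(frozenset({"enrich_ip", "query_logs", "summarize_alert"}))

decisions = {a: route(a, triage_scope) for a in ("query_logs", "isolate_host")}
```

Here `decisions["query_logs"]` is `"execute"` and `decisions["isolate_host"]` is `"escalate_to_human"`. Defaulting to escalation for anything not listed keeps the human in the loop for decisions the boundary never anticipated.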

For Caleb Sima, the real test of success isn’t how flashy these tools are. It’s whether they can disappear into the workflow. The most impactful AI, he argued, will be the kind you barely notice. It’ll handle work that used to eat up hours, surface decisions faster, and reduce noise without adding complexity.

The conversation offers a reality check and a road map for anyone exploring the intersection of AI and cybersecurity. If you didn’t catch the session live, it’s worth watching and sharing with your team. The future may still be unfolding, but it’s clear: the people shaping it are already at work.