Key Takeaways

- AI efficiency in SecOps should be defined by measurable impact. Don’t use AI for its own sake; the real value lies in reducing alert fatigue, investigation time, and manual workload.

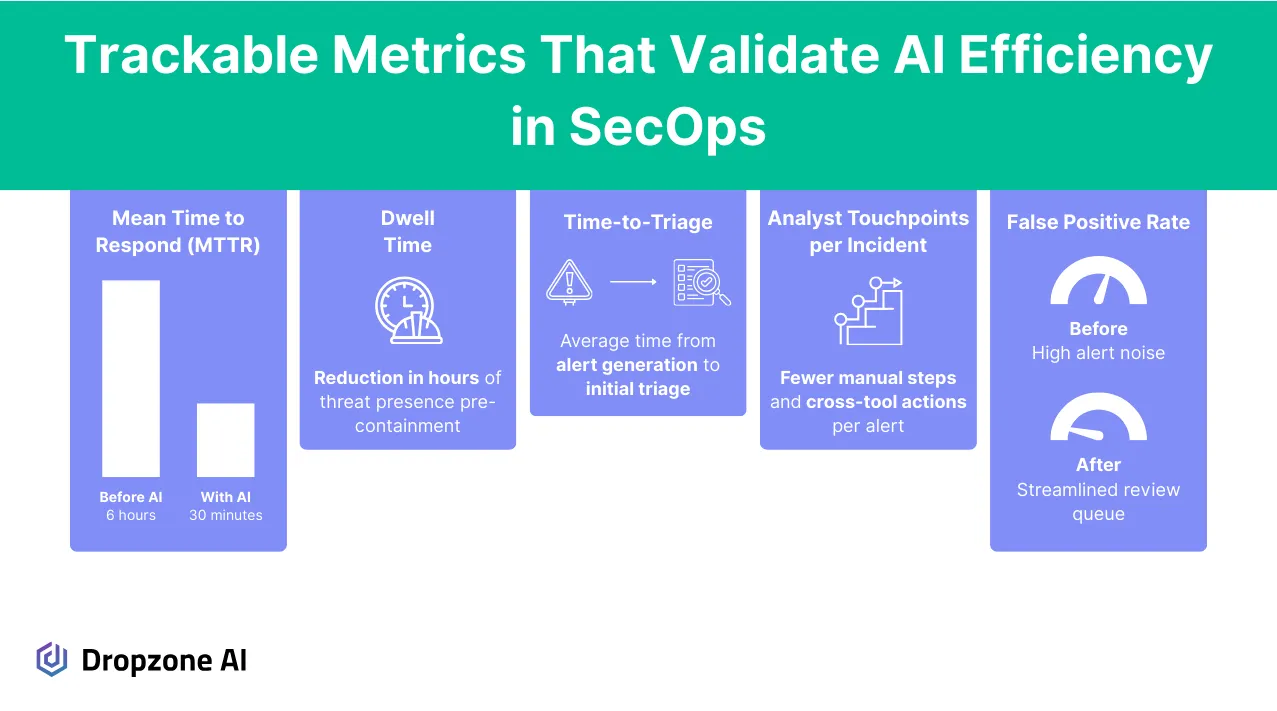

- Success depends on tracking the right operational metrics. Key indicators like dwell time, MTTR, and analyst touchpoints help teams understand whether AI improves performance.

- Clear baselines and focused use cases are critical for meaningful results. Teams should start with defined goals and apply AI to high-friction areas like triage or incident response to see tangible improvements.

Quick Answer

AI efficiency in SecOps means measurably reducing investigation time, alert fatigue, and manual workload through focused automation. Start by establishing baselines for key metrics like MTTR and dwell time, then deploy AI tools like Dropzone AI that can demonstrate 5x improvements in incident response speed while maintaining investigation quality.

Introduction

You are being handed AI budgets with little more than a broad directive to “make things more efficient.” That kind of mandate sounds promising, but without a clear definition of success it’s easy to waste time or invest in the wrong areas. The 2025 SANS SOC Survey found that nearly 40% of respondents use AI/ML tools in the SOC without making them part of defined workflows.


Most teams aren’t struggling to adopt AI; they’re trying to figure out where it makes a difference. This article outlines how to approach that challenge with focus, clarity, and real-world examples.

What Does AI Efficiency in SecOps Mean?

When people talk about using AI in security operations, the conversation often jumps straight to language models or automation tools. But efficiency isn’t defined by which tool you use; it’s about what improves. If an AI system doesn't help reduce the noise, cut down the investigation cycle, or make your team’s workload lighter, then it’s not delivering meaningful value.

Most teams are under pressure to do more with less. That might mean shortening dwell time, getting alerts in front of the right analyst faster, or scaling triage without burning out staff. Efficiency in this context means removing repetitive, manual steps and helping analysts focus on actual decision points. It's the operational improvements, not just technical upgrades, that matter.

There’s a lot of confusion around what counts as “AI efficiency.” Automating for the sake of automation doesn’t help. Repackaged chatbots, broad language models that don’t tie into your context, or tools that generate more questions than answers often add overhead instead of reducing it.

Efficiency should be observable. You should be able to point to a specific part of your incident response or alert handling process and say, “This now takes half the time it used to.” Without that change, it’s just another tool in the stack that might end up as shelfware.

Use Cases for Dropzone’s AI-Driven Efficiency

In real-world purple team exercises for one client, Dropzone helped cut incident response times by a factor of five. These weren’t staged scenarios or demo environments but structured tests with measurable before-and-after performance data. The difference came from how the platform handled alert flow and reduced the time analysts spent collecting context manually.

One of the biggest improvements was in the detection-to-containment cycle. First, Dropzone eliminated MTTA (mean time to acknowledge), the time an alert sits in a queue waiting to be investigated, which is typically the biggest component of MTTR. The Dropzone system also surfaced relevant context alongside alerts automatically, allowing analysts to move straight into decision-making instead of jumping between tools. This created faster handoffs and shorter dwell times without sacrificing investigation depth.

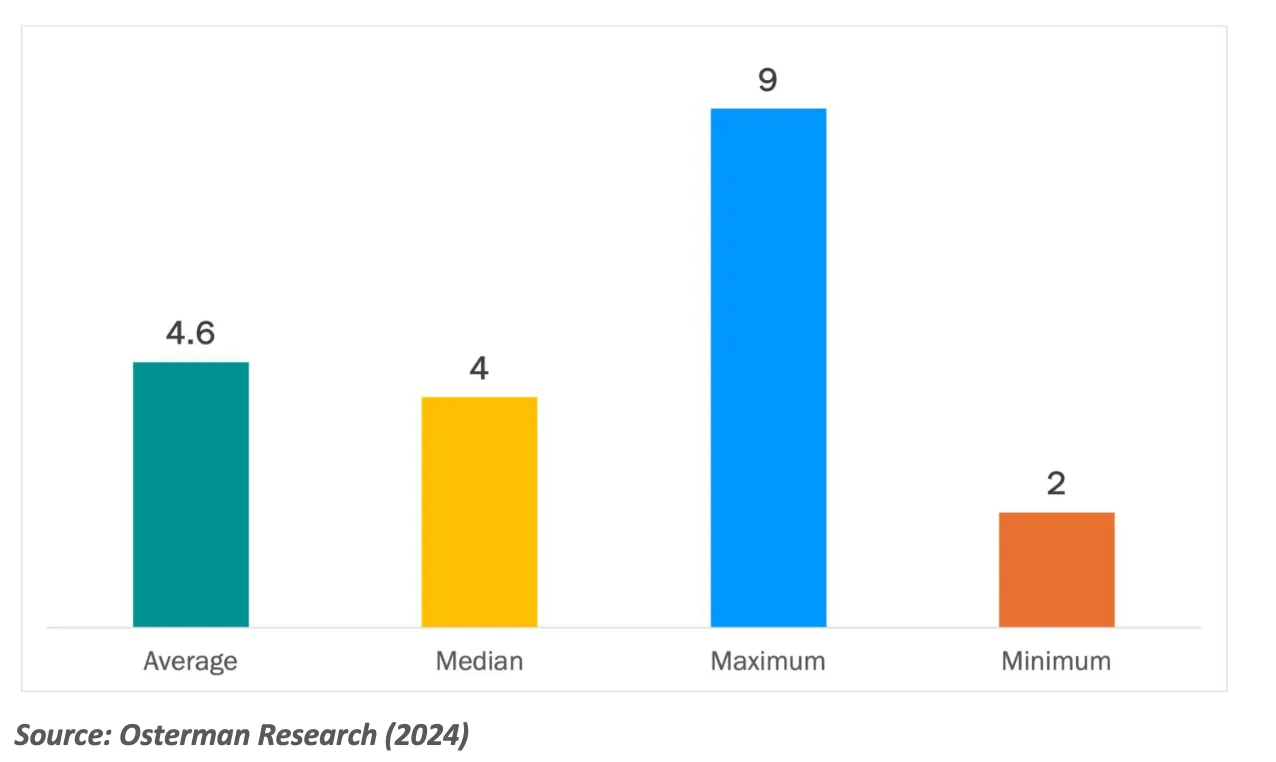

A 2024 Osterman Research survey found that, on average, 4.6 employees are involved in an incident. That’s a lot of context-switching and disruption for employees, not to mention friction added to the response process. AI SOC Agents like Dropzone AI can reduce the need for specialist skills and allow junior analysts to investigate further before escalating.

Triage also became more focused. Instead of presenting every alert equally, Dropzone prioritized based on context, like previous activity from the same host or known behavioral patterns, so analysts could spend their time where it mattered most. That led to fewer low-value distractions and better use of team resources.

Finally, the system handled repetitive tasks like log gathering and correlation. This reduced fatigue and let analysts focus on actual security judgments instead of rote work. For teams under pressure to improve response time and accuracy, these gains aligned directly with the AI efficiency mandates already coming from leadership.

How to Measure and Prove AI Efficiency

The first step is to define a baseline. It's difficult to say whether anything has improved without a clear starting point. That means logging your current incident response time, how many alerts your team handles each week, and how much effort is going into each investigation. Even rough numbers can give you a solid reference to measure against later.

From there, focus on metrics that reflect actual operational improvement. Dwell time is a useful signal; it tells you how long threats stay active before they're resolved. Time-to-triage helps you understand how quickly analysts can assess and route incoming alerts. Analyst touchpoints per incident can show whether manual effort decreases as automation increases.
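As a rough illustration, the baseline metrics above can be computed from a simple export of incident records. This is a minimal sketch, not a prescribed method: the `Incident` fields and the sample timestamps are hypothetical, and dwell time follows this article's working definition (first known activity to resolution). Map the fields to whatever your ticketing system or SIEM actually records.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    # Hypothetical field names; adapt to your own incident export.
    first_activity_at: datetime  # earliest known malicious activity
    alerted_at: datetime         # alert raised
    triaged_at: datetime         # analyst assessed and routed the alert
    resolved_at: datetime        # incident closed
    touchpoints: int             # manual analyst actions logged


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def baseline(incidents: list[Incident]) -> dict[str, float]:
    """Average the per-incident metrics, in hours, over a set of incidents."""
    return {
        "dwell_time_h": mean(_hours(i.resolved_at - i.first_activity_at) for i in incidents),
        "time_to_triage_h": mean(_hours(i.triaged_at - i.alerted_at) for i in incidents),
        "mttr_h": mean(_hours(i.resolved_at - i.alerted_at) for i in incidents),
        "touchpoints": mean(i.touchpoints for i in incidents),
    }


# Made-up sample data, for illustration only.
t0 = datetime(2025, 1, 6, 8, 0)
h = timedelta(hours=1)
incidents = [
    Incident(t0, t0 + 2 * h, t0 + 3 * h, t0 + 10 * h, touchpoints=6),
    Incident(t0, t0 + 4 * h, t0 + 7 * h, t0 + 14 * h, touchpoints=4),
]
metrics = baseline(incidents)
print(metrics)
```

Running the same function on incidents from before and after an AI rollout gives two comparable snapshots, even if the underlying numbers are rough.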

These numbers should move in the right direction if your AI tools are doing what they claim. If alert investigations are faster and require fewer people to resolve, you can confidently take that to leadership. Measuring change over time, especially across multiple incidents, will help filter out noise and isolate whether the AI is having an impact.
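One way to check whether a metric is moving in the right direction is to compare medians across many incidents instead of individual cases, since a single unusually long incident can distort an average. The sketch below assumes you already have per-incident MTTR values in hours for a "before" and an "after" period; the numbers are invented for illustration, and `percent_change` is a hypothetical helper.

```python
from statistics import median


def percent_change(before: list[float], after: list[float]) -> float:
    """Percent change in the median; a negative result means the metric dropped."""
    b, a = median(before), median(after)
    if b == 0:
        raise ValueError("baseline median is zero; percent change is undefined")
    return (a - b) / b * 100


# Made-up per-incident MTTR values in hours. Note the 40-hour outlier,
# which the median largely ignores.
mttr_before_h = [10.0, 12.0, 9.0, 40.0, 11.0]
mttr_after_h = [5.0, 6.0, 4.5, 7.0, 5.5]

change = percent_change(mttr_before_h, mttr_after_h)
print(f"Median MTTR change: {change:.1f}%")
```

With only a handful of incidents, a result like this is suggestive at best; the comparison becomes more trustworthy as the sample grows.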

It’s also important to watch for edge cases where automation creates new problems. If you start introducing tools that flood your team with false positives or generate outputs that can’t be explained, that’s not progress. Tracking error rates or analyst rework can help surface these issues early.
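Error rates and rework can be tracked with very little tooling. The following sketch assumes analysts record, for each AI-handled alert, whether they agreed with the AI's conclusion and whether they had to redo the investigation. The `Verdict` record and its fields are hypothetical, and the "false positive share" here is the fraction of AI-flagged alerts that analysts judged benign, which is only one of several ways to define the figure.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    # Hypothetical review record for one AI-handled alert.
    ai_says_malicious: bool
    analyst_says_malicious: bool
    reworked: bool  # analyst had to redo or correct the AI's investigation


def quality_rates(verdicts: list[Verdict]) -> dict[str, float]:
    if not verdicts:
        raise ValueError("no verdicts to evaluate")
    flagged = [v for v in verdicts if v.ai_says_malicious]
    false_positives = sum(1 for v in flagged if not v.analyst_says_malicious)
    return {
        # Share of AI-flagged alerts that the analyst judged benign.
        "false_positive_share": false_positives / len(flagged) if flagged else 0.0,
        # Share of all reviewed alerts that needed analyst rework.
        "rework_rate": sum(1 for v in verdicts if v.reworked) / len(verdicts),
    }


# Made-up sample data, for illustration only.
verdicts = [
    Verdict(True, True, False),
    Verdict(True, False, True),
    Verdict(False, False, False),
    Verdict(True, True, False),
]
rates = quality_rates(verdicts)
print(rates)
```

If either rate climbs after a new tool is introduced, that is an early signal the automation is adding work instead of removing it.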

Finally, avoid tool sprawl. Adding new systems to check a box often creates more friction than it solves. The goal isn’t to increase the number of dashboards; it’s to make existing workflows faster, cleaner, and more reliable. Focus on the AI that supports that outcome, not the one that just adds features.

Conclusion

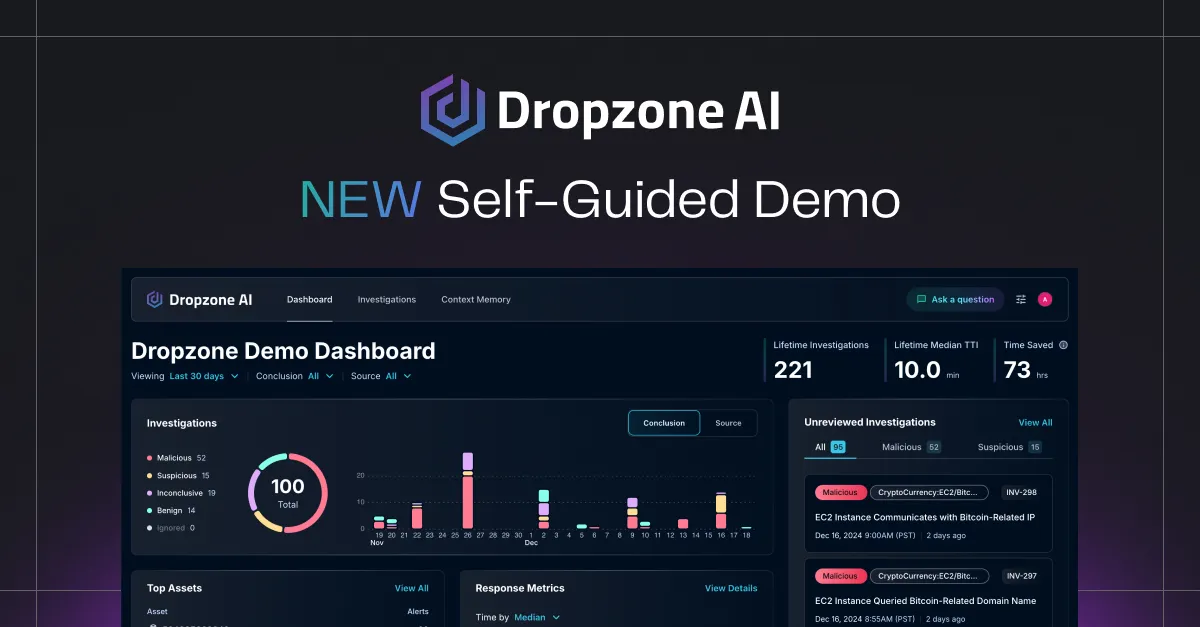

AI efficiency should be measured by what it improves: faster incident response, fewer manual steps, and more targeted triage. If you’ve been asked to use AI, it’s a good opportunity to reassess where your workflows slow down and where automation can deliver something measurable. Teams that focus on real metrics tend to see clearer outcomes and stronger results. If you're ready to see how that can work in practice, try our self-guided demo to explore what Dropzone AI can do.