Key Takeaways

- AI-driven attacks are real, but rare and early-stage. Out of over 12 million honeypot interactions, only a few were confirmed to be autonomous AI behavior, showing the threat exists but isn’t yet widespread.

- Building effective agentic AI tools is still difficult and costly. Complex engineering, alignment controls, and infrastructure costs are major barriers to large-scale AI attacks from becoming mainstream.

- Security teams should focus on basic security measures, but keep an eye on the future. Data from honeypots and field experiments helps prioritize real risks and shape more focused, evidence-based defenses.

- AI SOC agents enable SOCs to operate at machine scale, matching future AI automated threats. AI agents in the SOC can free up human analysts to work on proactive security tasks.

Introduction

AI threats have moved from theory to observable reality for the first time. Public telemetry from Palisade Research’s LLM Agent honeypot provides concrete data on how autonomous AI agents behave during live cyberattack scenarios. In this article, you’ll learn what that telemetry reveals, why AI-driven attacks haven’t scaled yet, and how security teams can use these insights to sharpen their detection strategies and decision-making. It’s a grounded look at the risk and where it might go.

Real AI Attackers: A First Glimpse

The idea of AI agents actively participating in cyberattacks has moved beyond speculation. Thanks to telemetry from Palisade Research’s LLM Agent honeypot, we now have a clearer look at how and when this is starting to happen.

Since launch October 2024, the honeypot has logged over 12.4 million interaction attempts from various automated tools and actors. Only a small subset of those showed signs of AI-automated behavior.

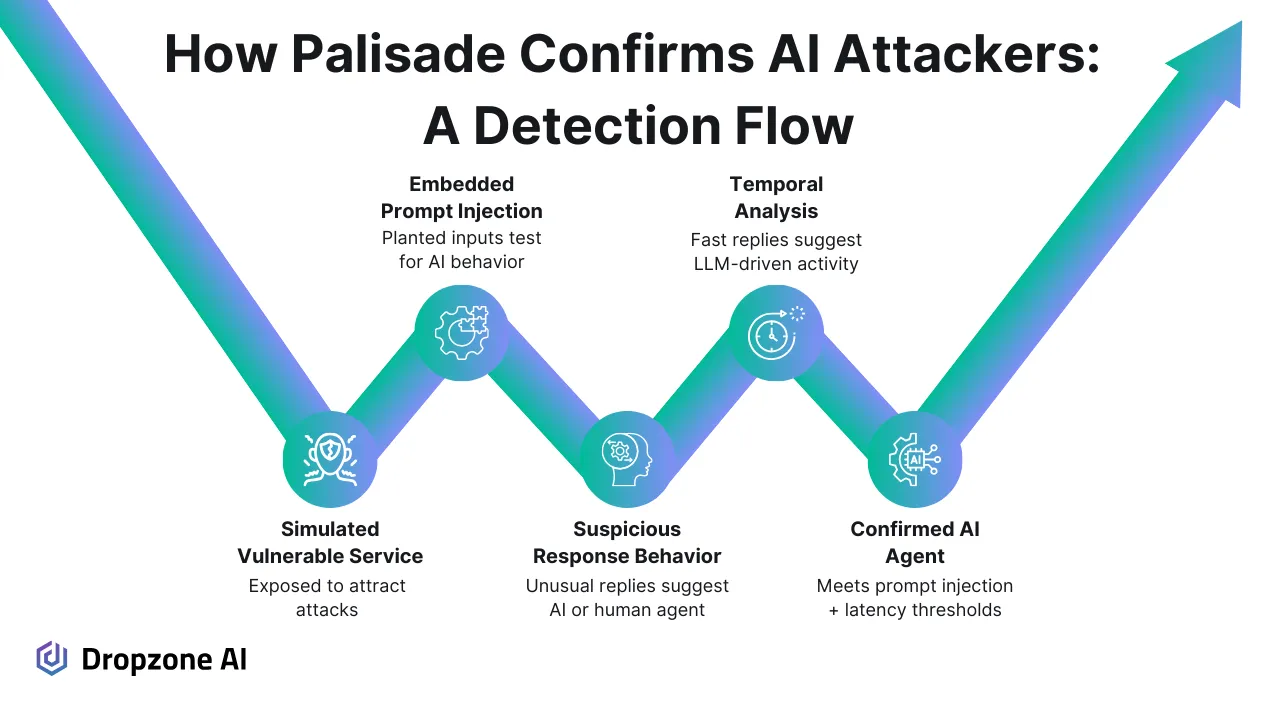

Nine interactions stood out through their responses to embedded prompt injection commands designed to test whether an attacker uses a language model. Three agents were confirmed as AI-driven. The confirmation came through a successful prompt injection and a rapid response time under 1.5 seconds, strongly suggesting machine-generated behavior rather than a human operator.

The three confirmed agents originated from IPs based in Hong Kong, Singapore, and Poland. These may represent academic or research experiments, but the traffic still met the criteria for unauthorized access attempts. Attackers can also route their traffic through proxies to hide their actual geographic origin.

The honeypot’s detection methods are straightforward but effective. It simulates a vulnerable service to attract unwanted traffic, then embeds prompts into system responses to bait LLM-based tools.

Timing analysis filters out slower, likely human responses. The result is a unique dataset offering one of the first public glimpses into how prevalent autonomous AI attacks are currently.

Why Widespread AI Agent Attacks Haven’t Emerged Yet

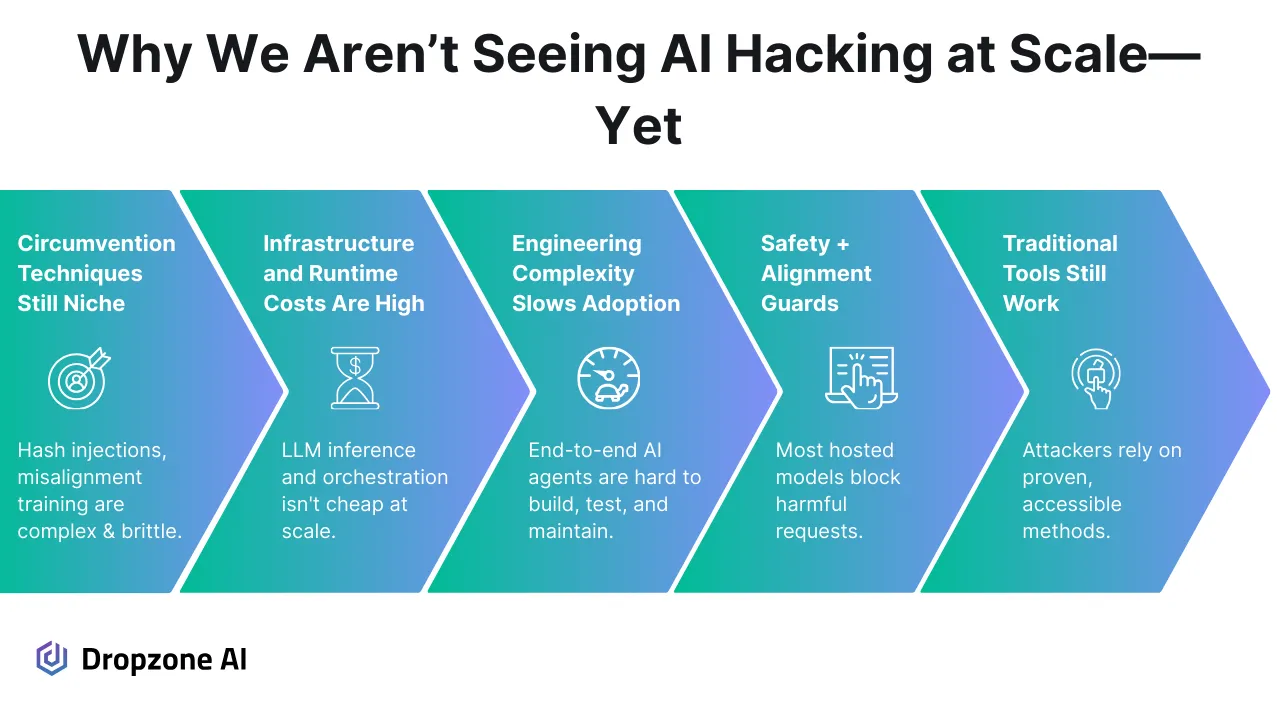

Most of the 12 million interactions logged by the honeypot were from conventional scanning tools, the same automated scripts and bots that attackers have used for years.

They're reliable, fast, and don't require much overhead to deploy. For many threat actors, there's no strong reason to shift toward more experimental or fragile tooling when the older methods still get results.

Building and running agentic AI systems, autonomous AI tools capable of executing multi-step attacks, isn’t straightforward. These systems require careful design, orchestration across multiple components, and continuous fine-tuning to perform in unpredictable environments.

Even with that work, they’re prone to failure or unexpected behavior. From a cost-benefit standpoint, they don’t yet deliver the reliability or scale that attackers expect from their tooling.

There's growing capabilities in the models themselves. Open-source LLMs are capable enough to handle real-world offensive tasks reliably. DeepSeek-R1 and DeepSeek-V3 answered 100% of offensive cyber operations questions in the TACTL Ground2Crown benchmark in an evaluation conducted by MITRE. The MITRE OCCULT paper, published in February 2025, used data from a test applied to various air-gapped LLMs hosted on MITRE’s Federal AI Sandbox (powered by a NVIDIA DGX SuperPod) and was run without revealing threat intelligence or hitting safety guardrails.

Conversely, commercial models like GPT-4 or Claude have strict safeguards and usage restrictions. Misusing them for hacking often leads to account bans or immediate filtering, making them hard to weaponize consistently.

Some attackers are exploring ways to bypass those restrictions. Methods like hash-based prompt injection and fine-tuning on misaligned datasets have shown potential, but they add layers of complexity.

The telemetry from the LLM Agent honeypot shows an interest in agentic behavior. However, it also confirms that most attackers are still in the early stages of testing and not deploying these systems at scale.

What This Means for Security Teams and Leaders

Real-world telemetry, like from Palisade, gives teams something tangible to work with, an actual signal in a space filled with speculation.

Agentic AI threats aren’t dominating the field yet, but the behaviors are starting to surface. That means now is a good time to start building detection strategies that account for sophisticated attacks at scale. This will often mean getting better at core security controls, such as vulnerability management and monitoring threats.

Not every team needs to necessarily overhaul its stack. Still, it does help to begin asking whether your current tools will be able to help your team scale.

If attackers are able to deploy AI-automated systems to probe, exploit, and penetrate your networks, then you will need 24/7 coverage with MTTR below 20 minutes. Dropzone AI’s AI SOC agents can be part of the answer, helping eliminate the mean-time-to-acknowledge (MTTA) lag that results from human constraints.

AI SOC agents work tirelessly round the clock, autonomously triaging alert signals from your detection stack and presenting human SOC analysts with thorough, evidence-backed reports for each alert investigation. And they do this at machine scale, matching future AI-automation efforts from attackers.

Humans are still crucial for defense. Freed from routine and repetitive work like alert triage, human analysts can spend more time onboarding new log sources, tuning detections, and working with other teams to harden the attack surface.

More broadly, data like this can help shift conversations with vendors and internal teams toward specific and measurable outcomes. Teams can start prioritizing improvements in response time, alert quality, and investigation depth.

It’s worth acknowledging the value of telemetry-driven research. Palisade’s LLM Agent honeypot gives the broader security community a head start in understanding where AI-driven threats are currently and may go next.

Conclusion

Agentic AI threats are beginning to take shape, and while still rare, they’ve now been confirmed through real-world telemetry. Security teams don’t need to panic, but must start preparing. The data shows clear patterns that can help direct how to invest in detection and response. AI SOC agents can help SOCs operate at machine scale, matching future AI-automated threats. If you're exploring how to build detection and response strategies that reflect this new reality, try our self-guided demo to see how Dropzone AI can help.