Protecting sensitive data is a non-negotiable priority for any organization, but detecting personally identifiable information (PII) at scale is rarely simple. As data volumes explode and privacy regulations grow more stringent, security teams are under increasing pressure to locate and lock down sensitive fields across sprawling cloud environments. Unfortunately, most existing tools are slow, expensive, and operationally heavy, often requiring full data scans that strain compute budgets and increase exposure risk.

At the Security Frontiers 2025 virtual conference, Kyle Polley offered a refreshing alternative: PII Detective. This open-source, AI-powered tool sidesteps the traditional pitfalls of PII discovery by never touching the data itself. Instead, it leverages large language models to analyze metadata only, making smart inferences about what columns are likely to contain sensitive information based on names, patterns, and structure.

PII Detective is a great example of how security practitioners are using AI to dramatically improve the efficiency of day-to-day security operations.

The Problem with Traditional PII Detection

For most organizations, detecting PII means throwing compute at the problem. Legacy tools operate under the assumption that more scanning equals better coverage, relying on brute-force methods like regex sweeps across entire datasets. These scans dig deep into raw data, combing through tables row by row in search of anything resembling sensitive information—names, email addresses, IDs, and beyond.
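To make the contrast concrete, here is a minimal sketch of the brute-force style described above. It is illustrative only and does not reproduce any particular product. The regex patterns and the `scan_rows` helper are hypothetical. The point is that the work grows with every row and every value read, and that the scanner needs read access to the raw data in the first place.

```python
import re
from typing import Iterable

# Hypothetical, deliberately simple patterns; real scanners ship many more.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_rows(column_values: dict[str, Iterable[str]]) -> dict[str, set[str]]:
    """Brute-force scan: test every value in every column against every pattern.

    Work is roughly rows x columns x patterns, and the scanner must be
    allowed to read the raw values themselves.
    """
    hits: dict[str, set[str]] = {}
    for column, values in column_values.items():
        for value in values:
            for label, pattern in PII_PATTERNS.items():
                if pattern.search(value):
                    hits.setdefault(column, set()).add(label)
    return hits


sample = {
    "contact": ["alice@example.com", "n/a"],
    "notes": ["call back 555-12-3456?", "ok"],
    "invoice_number": ["INV-0001", "INV-0002"],
}
print(scan_rows(sample))
```

In this toy run the free-text `notes` column trips the SSN pattern because of a number that merely has the right shape, which is the kind of harmless-value false positive that ends up in a manual review pile.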

However, as Kyle Polley pointed out in his Security Frontiers talk, this approach misses the point. “What if the solution isn’t more compute?” he asked. “What if it’s just better context?”

The current model introduces significant challenges. First, it's expensive. Running intensive data scans across cloud data warehouses like Snowflake or BigQuery can rack up staggering compute bills. Second, it's noisy. These tools often generate high volumes of false positives, flagging columns with vague names or harmless values as risks and leaving privacy teams with piles of results that require manual review. Third, it's inflexible. Full-data scans often require elevated permissions, creating operational headaches and increased risk if something goes wrong.

The PII Detective Approach

Rather than parsing through terabytes of raw data, PII Detective flips the model on its head. Kyle Polley’s tool asks a simple question: what if the information we need is already in front of us in the structure, not the contents?

PII Detective scans only metadata, such as column names, data types, and structural patterns. Avoiding raw data entirely dramatically reduces compute costs and privacy risks. At its core, a large language model infers sensitivity from naming conventions, schema logic, and contextual cues. For example, it can infer that a column named ssn or employee_id is probably sensitive, while invoice_number likely isn't.
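The sketch below illustrates the metadata-only idea under stated assumptions; it is not PII Detective's actual code. It works only from column names and types (in Snowflake or BigQuery these would typically come from an `INFORMATION_SCHEMA.COLUMNS` view), builds a prompt for a language model, and parses a JSON answer. The `ColumnMeta` type, the prompt wording, and the JSON response format are hypothetical, and the model call itself is omitted, so the example feeds in a canned response instead.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMeta:
    """Schema metadata only: no row values are ever read."""
    table: str
    name: str
    data_type: str


def build_prompt(columns: list[ColumnMeta]) -> str:
    """Describe the schema for the model (hypothetical prompt wording)."""
    lines = [f"- {c.table}.{c.name} ({c.data_type})" for c in columns]
    return (
        "For each column below, decide whether it likely contains personally "
        "identifiable information. Answer with a JSON object mapping "
        "'table.column' to true or false.\n" + "\n".join(lines)
    )


def parse_response(raw: str, columns: list[ColumnMeta]) -> dict[str, bool]:
    """Map each known column to the model's verdict.

    Columns the model skipped default to True so they still reach a human
    reviewer; extra keys the model invents are ignored.
    """
    answer = json.loads(raw)
    return {
        f"{c.table}.{c.name}": bool(answer.get(f"{c.table}.{c.name}", True))
        for c in columns
    }


schema = [
    ColumnMeta("employees", "ssn", "VARCHAR"),
    ColumnMeta("employees", "employee_id", "NUMBER"),
    ColumnMeta("billing", "invoice_number", "VARCHAR"),
]
prompt = build_prompt(schema)  # this string is what would be sent to the LLM
canned = '{"employees.ssn": true, "billing.invoice_number": false}'
print(parse_response(canned, schema))
```

Defaulting unanswered columns to "sensitive" is a design choice for this sketch: when the model is silent, the safer failure mode is an extra item for review rather than a silently missed column.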

The system doesn’t try to be perfect. Instead, it’s designed to move quickly and cheaply, flagging likely PII candidates and handing them off for human review as a final step. It’s a clean balance: AI handles the bulk, and humans make the call.
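The division of labor described above, where the model proposes and a person decides, can be sketched as a small review queue. This is a hypothetical illustration, not PII Detective's workflow: `Candidate`, `ReviewQueue`, and the idea that only confirmed columns move on to tagging or masking are assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass
class Candidate:
    column: str   # e.g. "employees.ssn"
    reason: str   # the model's short justification, kept for auditing
    status: Status = Status.PENDING


@dataclass
class ReviewQueue:
    items: dict[str, Candidate] = field(default_factory=dict)

    def flag(self, column: str, reason: str) -> None:
        """Model output lands here; nothing is tagged or masked yet."""
        self.items.setdefault(column, Candidate(column, reason))

    def decide(self, column: str, is_pii: bool) -> None:
        """A human makes the final call on each candidate."""
        self.items[column].status = Status.CONFIRMED if is_pii else Status.REJECTED

    def confirmed(self) -> list[str]:
        """Only human-confirmed columns would be passed on downstream."""
        return [c.column for c in self.items.values() if c.status is Status.CONFIRMED]

    def pending(self) -> list[str]:
        return [c.column for c in self.items.values() if c.status is Status.PENDING]


queue = ReviewQueue()
queue.flag("employees.ssn", "name matches a US Social Security number")
queue.flag("employees.employee_id", "internal identifier tied to a person")
queue.decide("employees.ssn", is_pii=True)
print(queue.confirmed(), queue.pending())
```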

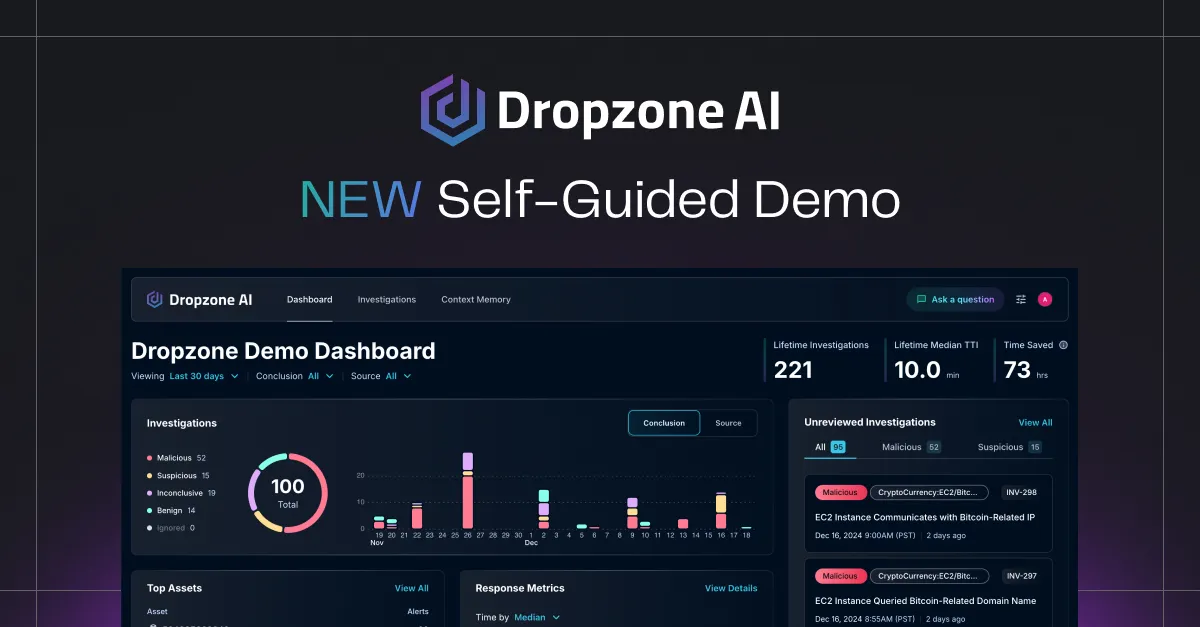

And the efficiency is hard to ignore. In Kyle's benchmarks, PII Detective could scan thousands of tables for roughly $5 in total compute. There is no complex infrastructure and no massive cloud bill, just smart inference and fast iteration, all while respecting the boundaries of data privacy.

Best of all, PII Detective is open source and available on GitHub.

Why It Matters: Practical AI, Not Hype

Kyle Polley’s project captured one of Security Frontiers’ core themes: real tools for solving real problems, built with practicality in mind rather than spectacle.

PII Detective avoids the complexity and cost of full data access by anchoring its logic in metadata and layering lightweight, LLM-powered inference on top. It's a reminder that effective AI doesn't have to be massive. It just has to be smart.

It also embraces a human-in-the-loop design that reinforces trust and accountability. Instead of trying to eliminate human oversight, the tool builds around it, automating what can be automated and surfacing what needs review. The result is a higher signal-to-noise ratio and a process that is faster and more accurate than traditional methods.

Perhaps most importantly, PII Detective doesn’t try to reinvent the stack. Its AI logic is composable and can slot into whatever data catalog, privacy tooling, or compliance workflow an organization already has. That flexibility is key, especially when so many enterprise-grade solutions arrive bloated, overpriced, and over-engineered, delivering only marginal gains in exchange for massive effort.

What Teams Can Learn from This

PII Detective is a reminder that simplicity is a feature, especially in a field that too often confuses complexity with capability. The tool delivers useful results with minimal privacy exposure by pairing metadata with LLM inference. It doesn’t overreach, and it doesn’t ask for unnecessary access.

Another key insight is that trust in AI comes from explainability and reversibility. PII Detective's results are auditable, reviewable, and easy to adjust, which makes the tool not just efficient but usable.

And the approach isn't limited to PII detection. The same pattern of metadata, LLM inference, and human review has enormous potential across security and compliance, from automated data classification to Tier 1 alert triage and investigation to access reviews. It offers a path forward that balances automation with oversight.

Lessons Worth Taking Home

PII Detective proves that solving big security problems doesn’t always require big tools. Sometimes, all it takes is a sharper question, a smaller footprint, and the right mix of automation and oversight. It’s the kind of work that quietly changes how teams think, and that’s exactly what Security Frontiers was all about.

If you’re interested in how AI is actually being used to solve real security problems, Security Frontiers is the place to see it in action.