Key Takeaways:

- Traditional threat modeling doesn't scale. Long review sessions and resource gaps make keeping pace with fast-moving development difficult.

- An AI copilot can ease the load. By generating baseline threat models automatically, the copilot gives engineers a head start while leaving them in control of final outputs.

- Data quality is critical. Clear diagrams and well-structured inputs directly impact the accuracy of AI-generated results.

- Privacy matters. Building the copilot in-house on AWS kept sensitive product data secure and simplified compliance and audit needs.

- The future is self-service. Developers will generate first-pass threat models with AI, while security engineers guide, validate, and refine.

Introduction

Threat modeling sits at the heart of secure software development. It's where teams pause to ask the hard questions:

- What are we building?

- What could go wrong?

- How do we protect against it?

The answers shape design decisions and reduce risk, but anyone who has been through the process knows how heavy it can feel. Sessions stretch for hours, security engineers are outnumbered by developers, and every new product or feature means starting over again.

The result is a practice that's vital for reducing vulnerabilities but notoriously time-consuming and difficult to scale.

That's the challenge one product security engineer set out to solve. Drawing from experience supporting multiple teams across a fast-moving enterprise, Murat Zhumagali began experimenting with an AI-powered copilot, an assistant designed to take on the repetitive legwork of threat modeling while keeping humans in the loop for oversight and decision-making.

The idea wasn't just to automate for efficiency's sake. It was about rethinking how generative AI could make threat modeling more practical and accessible:

- Fast enough to keep pace with development cycles

- Rigorous enough to deliver results security teams can trust

This story explores how copilots built with GenAI are beginning to transform a process long seen as a bottleneck into something far more scalable.

What Are the Challenges of Traditional Threat Modeling?

Threat modeling, often guided by the STRIDE framework, typically follows four steps:

- Define the scope

- Identify threats

- Map mitigations

- Record their status

It's a structured process that quickly becomes a drain on time and resources.
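To make the four steps concrete, here is a minimal sketch of the record a review might produce. The class and field names (`ThreatModel`, `Threat`, `Status`) are illustrative assumptions, not a schema described in the talk.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    """Hypothetical status values for a recorded threat."""
    OPEN = "open"
    MITIGATED = "mitigated"
    ACCEPTED = "accepted"


@dataclass
class Threat:
    component: str                                         # where the threat applies
    description: str                                       # what could go wrong
    mitigations: list[str] = field(default_factory=list)   # mapped mitigations
    status: Status = Status.OPEN                           # recorded status


@dataclass
class ThreatModel:
    scope: str                                             # what is being built
    threats: list[Threat] = field(default_factory=list)

    def open_threats(self) -> list[Threat]:
        """Return threats that still need a mitigation or a decision."""
        return [t for t in self.threats if t.status is Status.OPEN]
```

Even in this toy form, every threat carries four pieces of information that someone has to gather and keep current, which is where the hours go.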

Each STRIDE review requires long sessions with developers, dissecting architectures and data flows in detail. For many teams, a single security engineer supports 30–40 developers, making deep reviews hard to sustain.

The problem compounds in companies that grow through mergers and acquisitions, as each new business brings its own tech stack, leaving security teams juggling multiple environments simultaneously.

The outcome is a scalability problem. Threat modeling remains critical but struggles to keep up with modern development velocity. It risks becoming:

- A bottleneck that slows releases

- A check-the-box exercise that overlooks real risks

How Was the AI Copilot Built?

Faced with these limits, Murat set out to see whether a copilot could help. Part of the motivation was personal: catching up with the wave of GenAI tools and separating genuine value from hype. But there were practical concerns too.

Off-the-shelf copilots require sending sensitive data to external providers, a non-starter for product security. The solution had to stay private, running inside the company's environment.

The prototype came together with a simple stack:

- A Streamlit interface provided the front end

- Amazon Bedrock was the backbone

- Claude 3.7 powered the reasoning

- Retrieval-augmented generation (RAG) used Titan embeddings, OpenSearch, and an S3 bucket

The workflow was straightforward:

- User enters product name, description, and architecture diagram

- Copilot builds tailored prompt using STRIDE and OWASP Top 10 principles

- Model generates draft threat model with components, threats, severity, impacts, and mitigations

- Human reviewer validates results and adjusts where needed
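The prompt-building step in this workflow can be sketched roughly as follows. This is an illustration under stated assumptions, not the prompt used in the pilot: the function name `build_prompt`, its parameters, and the wording of the instructions are all hypothetical. Only the STRIDE category names and the requested output fields come from the framework and the workflow described above.

```python
# The six STRIDE threat categories.
STRIDE = (
    "Spoofing",
    "Tampering",
    "Repudiation",
    "Information disclosure",
    "Denial of service",
    "Elevation of privilege",
)

# Fields the draft threat model is expected to contain (taken from the workflow).
OUTPUT_FIELDS = ("component", "threat", "severity", "impact", "mitigation")


def build_prompt(name, description, diagram_text, context_snippets=()):
    """Assemble a tailored prompt from user input plus any retrieved context."""
    context = "\n".join(f"- {s}" for s in context_snippets) or "- (none)"
    return "\n".join([
        "You are assisting a product security engineer with a draft threat model.",
        f"Product: {name}",
        f"Description: {description}",
        "Architecture (text form):",
        diagram_text,
        "Retrieved context:",
        context,
        "Consider each STRIDE category: " + ", ".join(STRIDE) + ".",
        "Cross-check findings against the OWASP Top 10.",
        "For every finding, report: " + ", ".join(OUTPUT_FIELDS) + ".",
        "Mark anything uncertain so a human reviewer can validate it.",
    ])
```

The last instruction reflects the human-in-the-loop step: the model produces a draft, and the reviewer decides what stays.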

The AI took on the repetitive legwork, while engineers kept final say. The goal wasn't to replace security expertise but to provide a strong starting point that made reviews faster, more consistent, and easier to scale.

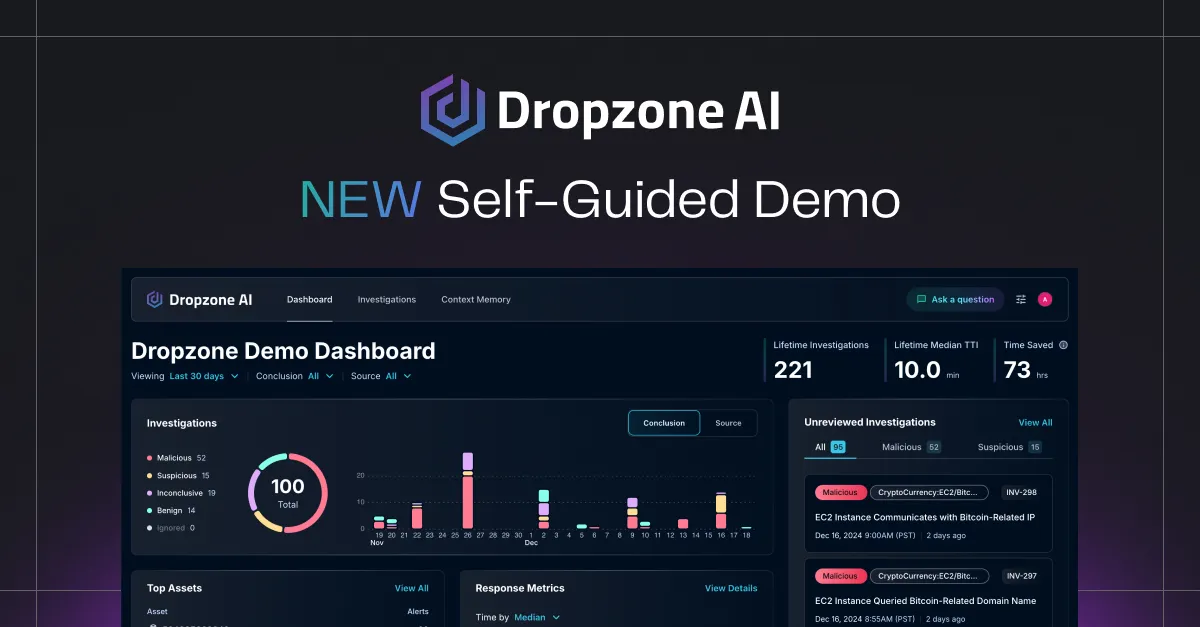

Murat presented this work at the 2025 Security Frontiers event.

What Lessons Were Learned from the Pilot?

The first runs of the copilot showed just how important iteration would be. In its earliest form, relying only on prompt-stuffing, the outputs were barely usable: roughly 5 percent were relevant to the actual product.

Adding more context to the prompts improved things, nudging accuracy into the 30–35 percent range, but still left too much noise. The real breakthrough came with retrieval-augmented generation.

Accuracy progression:

- Prompt-stuffing only: 5% relevant

- Enhanced prompts: 30–35% relevant

- RAG implementation: 40–45% relevant

- Claude 3.7 upgrade: 50–55% relevant

By storing artifacts in S3, embedding them, and pulling in context through OpenSearch, the team lifted accuracy to the 40–45 percent range. The Claude 3.7 upgrade added another bump, bringing results to 50–55 percent, closer to the roughly 70 percent the team is aiming for.
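The retrieval idea behind that jump can be illustrated with a toy example. This sketch deliberately avoids any AWS calls: the word-count `embed` function stands in for Titan embeddings, and the `InMemoryIndex` class stands in for an OpenSearch vector index. Both are hypothetical simplifications meant only to show the embed, store, and search-by-similarity pattern.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Toy stand-in for a vector index; keeps documents and vectors in a list."""

    def __init__(self):
        self._docs = []

    def add(self, doc_id: str, text: str) -> None:
        self._docs.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2):
        """Return the k stored documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self._docs, key=lambda d: cosine(q, d[2]), reverse=True)
        return [(doc_id, text) for doc_id, text, _ in ranked[:k]]
```

Only the top-ranked snippets are added to the prompt, so the model sees context relevant to the product at hand rather than everything in the knowledge base.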

Along the way, the team uncovered several practical lessons:

- Garbage in, garbage out: If diagrams were incomplete or poorly formatted, the model's reasoning followed suit

- RAG scales better: Far more effective than giant prompts, especially as the knowledge base grew

- Diagram conversion matters: Converting complex architecture maps into DSL format improved results, though it introduced more prep work
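The talk does not specify which DSL the diagrams were converted into, so the following sketch uses a made-up line format (`component ...` and `flow ...`) purely to show the idea: turning a diagram's boxes and arrows into plain text a model can read. The function name and input shapes are assumptions as well.

```python
def diagram_to_dsl(components, flows):
    """Render components and data flows as a small text DSL (hypothetical format).

    components: dict mapping component name -> type, e.g. {"api": "service"}
    flows: list of (source, destination, label) tuples
    """
    lines = [f"component {name} type={kind}" for name, kind in sorted(components.items())]
    for src, dst, label in flows:
        # Reject flows that point at components missing from the diagram:
        # incomplete input leads to unreliable output.
        if src not in components or dst not in components:
            raise ValueError(f"flow references unknown component: {src} -> {dst}")
        lines.append(f"flow {src} -> {dst} : {label}")
    return "\n".join(lines)
```

Rejecting incomplete diagrams up front is one way to act on the "garbage in, garbage out" lesson before the model ever sees the input.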

For developers, the outputs were good enough to serve as a baseline, something they could quickly review and refine. For security engineers, however, the bar is higher.

Until the system consistently reaches roughly 70 percent accuracy, it will remain a supplement rather than a full shift in how threat modeling is done. Still, the steady gains showed that an AI copilot could move from experiment to genuinely useful tool with the right data and workflow.

Why Does In-House Deployment Matter?

One reason the project stayed viable was the decision to build it in-house. Privacy and compliance concerns ruled out sending sensitive product data to external LLM providers.

By keeping everything inside AWS, where the company was already hosting customer workloads, the team could ensure that models ran within their own security perimeter. No designs, diagrams, or data flows ever left their environment.

This approach offered more than peace of mind. It gave the team direct control over:

- Which models to use

- How often to upgrade them

- How to tune context retrieval for their needs

They weren't locked into someone else's roadmap or forced to accept opaque changes in performance. Each improvement, from embedding strategies to diagram handling, could be tested and adjusted on its own terms.

The benefits also extended to governance. In the event of a compliance review or legal audit, it was far easier to show:

- Where data lived

- How it was processed

- Who had access

Instead of debating whether a vendor might have stored training data or logged requests, the team could point to a contained system operating entirely within their AWS footprint. For security engineers, that clarity wasn't just a technical win; it was a strategic one, ensuring that innovation didn't come at the expense of trust or accountability.

What Comes Next for AI-Powered Threat Modeling?

The next phase is about refinement. First, the knowledge base needs to handle multimodal inputs so diagrams no longer require heavy manual prep. Feeding in past threat models should also improve accuracy and consistency, giving the copilot more of the context security engineers rely on.

Model choice is another area to explore. Testing alternatives to Claude will ensure flexibility and reveal performance trade-offs. At the same time, adding feedback loops where engineers can adjust severity, mitigations, or scenarios will make the tool more interactive and trustworthy.
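A feedback loop of this kind might look something like the sketch below. The `Review` record, the dictionary shape of a draft threat, and the `relevance_rate` metric are all assumptions made for illustration. The talk does not describe how feedback would be stored or how relevance was measured.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    """One engineer verdict on one generated threat (hypothetical shape)."""
    threat_id: str
    relevant: bool
    severity_override: Optional[str] = None


def apply_reviews(draft, reviews):
    """Drop threats marked irrelevant and apply severity overrides.

    draft: list of dicts with at least "id" and "severity" keys.
    Threats without a review are kept and flagged as not yet reviewed.
    """
    by_id = {r.threat_id: r for r in reviews}
    kept = []
    for threat in draft:
        review = by_id.get(threat["id"])
        if review is None:
            kept.append(dict(threat, reviewed=False))
        elif review.relevant:
            severity = review.severity_override or threat["severity"]
            kept.append(dict(threat, severity=severity, reviewed=True))
    return kept


def relevance_rate(reviews):
    """Share of reviewed threats that engineers marked as relevant."""
    if not reviews:
        return 0.0
    return sum(r.relevant for r in reviews) / len(reviews)
```

Tracking a rate like this per model would also give a simple basis for comparing Claude against alternatives on the same set of products.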

Rollout will begin with simpler products, proving value quickly before tackling more complex systems. From there, the vision is broader:

- Self-service threat modeling with developers taking the first pass

- Security engineers refining results and ensuring quality

- Faster iteration cycles without sacrificing rigor

Final Thoughts

Threat modeling remains one of the most valuable practices in security, but it simply won't scale if we rely only on traditional methods. The hours required, the imbalance between security engineers and developers, and the growing complexity of modern environments all point to the same conclusion: we need new approaches.

AI copilots won't replace the expertise of security professionals, but they can change the balance of work. By providing a reliable baseline, they free engineers to focus on higher-value tasks:

- Refining mitigations

- Validating edge cases

- Guiding strategy

At the same time, developers gain a self-service tool that helps them think about threats earlier and more often, without waiting on scarce security resources.

The future of threat modeling may not be about sitting in marathon review sessions, but about giving every team access to an AI partner that makes the process faster, more consistent, and easier to adopt.

Security engineers remain in the loop, shaping outputs and ensuring quality, but the heavy lifting shifts to automation.

If you'd like to see more stories like this and hear directly from the people building the next generation of security tools, catch the full session and others on Security Frontiers.