The AI Hallucination Everyone Fears

"The AI just made that up."

It's the statement that makes many CISOs skeptical of AI-powered security tools. They fear an AI SOC analyst drawing a conclusion that doesn't match the evidence. The logs say one thing, but the AI confidently states another.

This is the hallucination risk that keeps security leaders from fully trusting AI systems. But what we’ve found building the Dropzone AI SOC analyst is that the AI very rarely gets the conclusion wrong because of hallucinations.

When the AI agent gets a conclusion wrong, it's doing exactly what any analyst would do with incomplete information: drawing the most logical conclusion from the data it can see. The problem isn't the AI's reasoning. The problem is that the AI is missing critical context.

This distinction matters because it reveals something fundamental about building reliable AI systems for security operations: accuracy isn't just about the model; it's about the engineering around it.

Why Does "Just Add AI" Fail for Security Operations?

There's a dangerous misconception that working with LLMs is simple: just "throw an LLM at the problem" and let it figure things out. In reality, building a reliable, accurate AI system requires substantial engineering to ensure non-deterministic models operate in a predictable manner.

Shopify CEO Tobias Lütke captured this perfectly in a tweet last summer: "I really like the term 'context engineering' over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM."

This fits with what we've found building the Dropzone AI SOC analyst. Context engineering isn't just a better term; it's a better framework for thinking about AI reliability.

Prompt engineering focuses on how you ask the question: "Write this in a certain style" or "Format the output this way."

Context engineering focuses on what information the AI has available: "Here's the complete dataset, here's how it was collected, here's what you're looking for, and here's why it matters."

For security operations, this distinction is critical. Get the context wrong, and even the most sophisticated model will arrive at inaccurate conclusions.

What Actually Went Wrong: Was It Really a Hallucination?

Let me walk you through a bug that we found when building a new integration.

The Scenario

We were building a new integration for Dropzone AI that analyzes security logs and communication data. The system needed to investigate potential threats by examining network traffic between specific IP addresses.

The Symptom

During testing, the AI started generating conclusions that didn't match the evidence. It would see network traffic on unexpected ports and flag suspicious communication patterns that didn't actually exist. Initially, this looked like a textbook case of AI hallucination.

The Root Cause

But when we dug deeper, we found something interesting: The AI wasn't hallucinating. It was drawing perfectly reasonable conclusions from the data it received. The problem was that the data itself was misleading.

Here's what happened:

The tool that generated database queries to pull network logs had a bug. Instead of joining the two IP address conditions with AND to find communications between two specific IP addresses, it incorrectly joined them with OR.

That OR changed everything.

The incorrect OR query returned every log in which either IP communicated with any address, not just the traffic between the two of them. This generated a multi-megabyte response that needed to be chunked up.
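To make the difference concrete, here is a minimal sketch that filters an in-memory list of log records. The record fields (`src`, `dst`, `port`), the sample addresses, and both helper functions are hypothetical; they stand in for whatever query language the real tool generated, which this post does not show.

```python
from typing import Dict, List

Log = Dict[str, object]

# Hypothetical sample records, for illustration only.
LOGS: List[Log] = [
    {"src": "10.0.0.5", "dst": "10.0.0.9", "port": 443},
    {"src": "10.0.0.9", "dst": "10.0.0.5", "port": 443},
    {"src": "10.0.0.5", "dst": "192.0.2.10", "port": 53},
    {"src": "172.16.1.4", "dst": "10.0.0.9", "port": 3389},
    {"src": "172.16.1.4", "dst": "192.0.2.10", "port": 53},
]


def between(logs: List[Log], ip_a: str, ip_b: str) -> List[Log]:
    """Intended filter (AND): both IPs must be the two endpoints."""
    return [r for r in logs if {r["src"], r["dst"]} == {ip_a, ip_b}]


def involving_either(logs: List[Log], ip_a: str, ip_b: str) -> List[Log]:
    """Buggy filter (OR): a record matches if either IP is an endpoint."""
    return [r for r in logs if {r["src"], r["dst"]} & {ip_a, ip_b}]


intended = between(LOGS, "10.0.0.5", "10.0.0.9")
buggy = involving_either(LOGS, "10.0.0.5", "10.0.0.9")
print(len(intended), len(buggy))
```

On this sample the OR version also returns the DNS lookup and the RDP connection, which involve one of the two hosts but say nothing about traffic between them. That unrelated traffic is the kind of material that shows up as "unexpected ports" downstream.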

How Context Gets Lost: A Chunking Problem

Here's where context engineering becomes critical.

Because the log data was so large (remember, it included far more traffic than we needed), our system couldn't send it all to the LLM in a single API call. Our system had to chunk it (divide the data into smaller pieces for the LLM to process sequentially).

Here's where the key failure in our scaffolding occurred: the original query, which gave the LLM essential context about how the data was collected, was prepended only to the first chunk of the response. When the system began processing the subsequent chunks, the LLM no longer had the original query and therefore lacked that crucial context.

Think of it like reading a detective novel that someone has torn apart and is handing to you one chapter at a time. You can understand each chapter individually, but you might miss connections between them.

Our system worked like this:

Chunk 1: Included the original query + first portion of log data

Chunk 2: Second portion of log data (but no query information)

Chunk 3: Third portion of log data (but no query information)

Chunk 4: Fourth portion of log data (but no query information)

The LLM knew how the data was collected when processing the first chunk. But in chunks 2, 3, and 4, it had lost that critical context.

Without knowing "this data came from an OR query that mixed unrelated traffic," the LLM did exactly what it should do: analyze the data it could see and draw logical conclusions. Those conclusions were wrong, but they were reasonable given the incomplete information. To be fair, any human analyst could make the same mistake given the same limited context.

This wasn't a hallucination. This was a context engineering failure.

To fix the issue, we modified our chunking algorithm to always include the original query at the beginning of every data chunk (not just the first one).

Now our system works like this:

Chunk 1: Original query + first portion of log data

Chunk 2: Original query + second portion of log data

Chunk 3: Original query + third portion of log data

Chunk 4: Original query + fourth portion of log data

Even if the data requires 20 chunks, chunk 15 still begins with the complete context of how the data was retrieved. The LLM always knows: "This is OR query data, which means these logs might not all be related."
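A minimal sketch of this kind of chunker is shown below. It is not the Dropzone AI implementation: the function name `chunk_with_query`, the header wording, and the use of a character budget as a stand-in for a token limit are all assumptions made for illustration.

```python
from typing import List


def chunk_with_query(query: str, log_lines: List[str], max_chars: int) -> List[str]:
    """Split log lines into chunks and prefix EVERY chunk with the query.

    max_chars is a simple character budget per chunk (an assumption; a real
    system would more likely budget in tokens).
    """
    header = f"QUERY USED TO RETRIEVE THIS DATA:\n{query}\n\nLOG DATA:\n"
    budget = max_chars - len(header)
    if budget <= 0:
        raise ValueError("max_chars is too small to fit the query header")

    chunks: List[str] = []
    current: List[str] = []
    used = 0
    for line in log_lines:
        cost = len(line) + 1  # +1 for the newline that joins lines
        # Flush the current chunk before it would exceed the budget.
        # A single line longer than the budget still gets its own chunk.
        if current and used + cost > budget:
            chunks.append(header + "\n".join(current))
            current, used = [], 0
        current.append(line)
        used += cost
    if current:
        chunks.append(header + "\n".join(current))
    return chunks
```

The design choice is to pay a fixed cost per chunk (the repeated header) so that no chunk can be interpreted without knowing how its data was retrieved. Splitting on whole log lines also keeps individual records from being cut in half.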

How Do You Engineer Reliable AI Security Systems?

Context preservation is one technique. Another powerful approach is reducing the scope of what we ask the LLM to do.

Instead of using a single enormous prompt to "investigate and report on this security incident," we break investigations into smaller, discrete tasks:

- "Summarize the logs in this chunk"

- "Identify the top three anomalous IP addresses"

- "Determine if this authentication pattern is suspicious"

This compartmentalization serves two purposes:

- Reduces complexity: Smaller, focused tasks are easier to execute accurately

- Enables pre-training: We can train specialized agents for specific investigation tasks, improving consistency

This is one reason Dropzone AI uses a multi-agent architecture based on the industry-standard OSCAR investigative framework. By breaking investigations into discrete phases (Obtain, Strategize, Collect, Analyze, Report), we ensure each step has appropriate context and clear objectives.
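As a rough illustration of scoping tasks this way, the sketch below gives each task its own instruction and only the pieces of context it needs. The `Task` type, the context keys, and the injected `llm` callable are hypothetical and are not Dropzone AI's agent design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Task:
    name: str
    instruction: str
    needs: Tuple[str, ...]  # context keys this task is allowed to see


# Hypothetical task list mirroring the examples in the text.
TASKS: List[Task] = [
    Task("summarize", "Summarize the logs in this chunk.", ("query", "chunk")),
    Task("anomalies", "Identify the top three anomalous IP addresses.", ("query", "chunk")),
    Task("auth", "Determine if this authentication pattern is suspicious.", ("auth_events",)),
]


def build_prompt(task: Task, context: Dict[str, str]) -> str:
    """Build a focused prompt; refuse to run if required context is absent."""
    missing = [k for k in task.needs if k not in context]
    if missing:
        raise KeyError(f"task {task.name!r} is missing context: {missing}")
    sections = [f"## {k}\n{context[k]}" for k in task.needs]
    return task.instruction + "\n\n" + "\n\n".join(sections)


def run_tasks(
    tasks: List[Task], context: Dict[str, str], llm: Callable[[str], str]
) -> Dict[str, str]:
    """Run each small task separately and collect the answers by task name."""
    return {t.name: llm(build_prompt(t, context)) for t in tasks}
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client, and it makes each task easy to test with a stub.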

Building Trustworthy AI Systems: Beyond Context Engineering

Context engineering is fundamental, but it's just one component of building AI systems that security teams can trust.

Quality Control and Validation

Ensuring accuracy is a continuous effort. Our Dropzone AI quality control program tracks key metrics like false positives and false negatives. We understand that customers need to trust the accuracy of the system to realize value.

Trust is built through:

- Continuous evaluation: Regular testing against known scenarios

- Transparency: Showing how conclusions were reached

- Evidence preservation: Maintaining the full investigative trail
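As one example of what continuous evaluation can measure, the sketch below computes false positive and false negative rates from a labeled test set. The post does not describe how Dropzone AI calculates its metrics, so the function name and the input shape are assumptions.

```python
from typing import Dict, Iterable, Tuple


def error_rates(results: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """Compute error rates from (predicted_malicious, actually_malicious) pairs."""
    tp = fp = tn = fn = 0
    for predicted, actual in results:
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    return {
        # Share of benign cases that were wrongly flagged as malicious.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Share of malicious cases that were wrongly marked benign.
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }
```

Running this against the same set of known scenarios after every change makes regressions visible as a number rather than an impression.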

Transparency Through Action Graphs

For every Dropzone AI investigation, you can view an action graph that shows exactly how the system planned and executed the investigation. You can see:

- What queries were run

- What data sources were consulted

- How evidence was analyzed

- Why specific conclusions were reached

This isn't a "black box" that tells you "trust me." It's a transparent system that shows its work.

This transparency serves multiple purposes:

- Validation: Security teams can verify the reasoning

- Training: Teams can learn from AI investigation techniques

- Debugging: When something goes wrong, you can trace exactly where

- Compliance: Audit trails for regulatory requirements
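The internal format of the Dropzone AI action graph is not described here, but the underlying idea can be sketched as a small dependency graph in which each step records the steps it relied on. The `Action` and `ActionGraph` names and their fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Action:
    id: str
    kind: str    # e.g. "query", "analysis", "conclusion"
    detail: str  # human-readable description of what was done
    parents: List[str] = field(default_factory=list)  # ids this step relied on


class ActionGraph:
    def __init__(self) -> None:
        self._actions: Dict[str, Action] = {}

    def add(self, action: Action) -> None:
        """Record a step; every parent must already have been recorded."""
        for parent in action.parents:
            if parent not in self._actions:
                raise KeyError(f"unknown parent {parent!r}")
        self._actions[action.id] = action

    def trace(self, action_id: str) -> List[Action]:
        """Return the step and everything it depends on, dependencies first."""
        seen: Set[str] = set()
        ordered: List[Action] = []

        def visit(aid: str) -> None:
            if aid in seen:
                return
            seen.add(aid)
            for parent in self._actions[aid].parents:
                visit(parent)
            ordered.append(self._actions[aid])

        visit(action_id)
        return ordered
```

Calling `trace` on a conclusion returns only the queries and analyses that fed into it, which is the property that makes validation and debugging practical.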

The Scaffolding That Makes AI Reliable

We view the scaffolding (the deterministic logic, data flow, and context management) as the engine that harnesses LLM power and makes it trustworthy for production security operations.

This includes:

- Data validation: Ensuring inputs are clean and complete

- Context management: Preserving critical information across system components

- Task decomposition: Breaking complex work into manageable pieces

- Quality gates: Checkpoints that validate outputs before proceeding

- Error handling: Graceful failures when context is insufficient
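A quality gate combined with graceful failure might look roughly like the sketch below, which refuses to call the model when required context is missing. The required field names, the `INCONCLUSIVE` message, and the injected `llm` callable are assumptions for illustration, not Dropzone AI's actual checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical list of fields an analysis step needs before it may run.
REQUIRED_CONTEXT = ("query", "data_source", "log_data")


@dataclass
class GateResult:
    ok: bool
    missing: List[str]


def context_gate(context: Dict[str, str]) -> GateResult:
    """Quality gate: report which required fields are absent or blank."""
    missing = [k for k in REQUIRED_CONTEXT if not context.get(k, "").strip()]
    return GateResult(ok=not missing, missing=missing)


def analyze(context: Dict[str, str], llm: Callable[[str], str]) -> str:
    """Call the model only when the gate passes; otherwise fail gracefully."""
    gate = context_gate(context)
    if not gate.ok:
        return "INCONCLUSIVE: missing context: " + ", ".join(gate.missing)
    prompt = "\n\n".join(f"{k}:\n{context[k]}" for k in REQUIRED_CONTEXT)
    return llm(prompt)
```

Returning an explicit inconclusive result is deliberate: a chunk that arrives without its query is reported as a gap in context rather than analyzed as if it were complete.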

Key Takeaways

For Developers Building with LLMs

If you're building AI-powered systems, particularly for security operations, here are the key principles:

1. Context Is More Important Than the Model

The most advanced LLM will fail without proper context. Invest heavily in ensuring your system provides complete, relevant information for every task.

2. Preserve Context Across Boundaries

When data moves between components (through chunking, APIs, or processing stages), context can get lost. Explicitly engineer context preservation into your architecture.

3. Reduce Task Scope for Better Reliability

Instead of asking AI to solve enormous problems, break work into smaller, focused tasks. Smaller scope means better context control and more consistent results.

4. Make Failures Visible

When something goes wrong, you need to know where and why. Build transparency and observability into your system from the start.

5. Pre-Train for Repetitive Tasks

If your system performs the same types of tasks repeatedly, invest in specialized training for those functions. Generic models are powerful, but specialized training improves consistency.

For Security Leaders Evaluating AI

When evaluating AI vendors for security operations, ask these questions:

1. Can you show me how your system stores and updates context?

Vendors should be able to explain their context engineering approach in detail. If they can't, that's a red flag.

2. Can I see the investigation process, not just the results?

Transparency matters. You should be able to validate how conclusions were reached.

3. What's your approach to quality control and validation?

Accuracy claims should be backed by a mature quality assurance process, not anecdotes.

4. How do you handle cases where context is insufficient?

The system should gracefully handle situations where it doesn't have enough information to make accurate conclusions.

Conclusion: Engineering Reliability Into AI

When AI systems fail in security operations, the common response is to blame the model: "It hallucinated" or "AI isn't ready for security." But in our experience, most failures aren't model limitations; they're engineering problems.

In the example above, the bug we encountered looked like a hallucination, but it was actually:

- A query generation error (deterministic bug)

- That created misleading data

- Which was processed without preserving context

- Leading to reasonable conclusions from incomplete information

The fix wasn't a better model. The fix was better context engineering.

For developers building with LLMs: invest deeply in the scaffolding around your models. Context preservation, task decomposition, and transparent design aren't optional extras; they're fundamental requirements for reliability.

For security leaders evaluating AI: look beyond the model itself. Ask about context engineering, transparency, and quality control. The difference between a trustworthy AI system and an unreliable one often comes down to the engineering work you can't see.

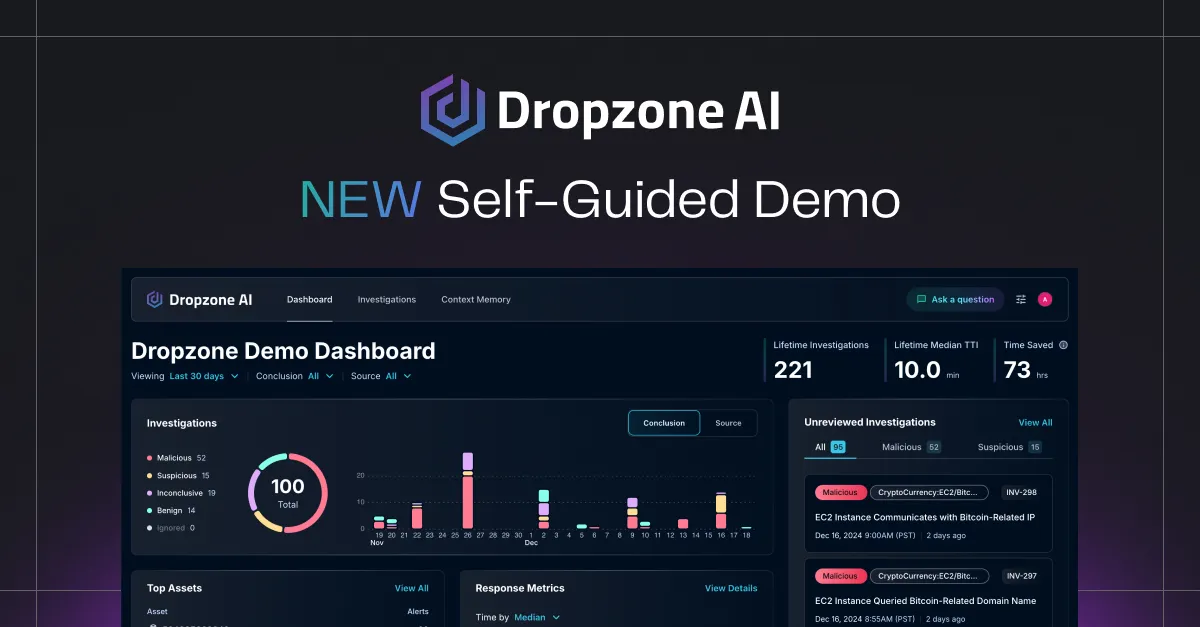

Want to see Dropzone AI's context engineering in action? Try our self-guided demo to explore how our system maintains context and transparency throughout security investigations.