AI SOC agents are entering a new phase. Organizations like Zapier, Mysten Labs, and Pipe have deployed them in production to handle real alert volume and reduce workload on human analysts. As this shift happens, one capability is coming up consistently in conversations with mature security teams: coachability.

Coachability refers to how easily security teams can guide, tune, and customize the behavior of an AI SOC agent. Not by writing brittle if/then logic, scripting Python, or retrofitting legacy playbooks, but by using AI reasoning capabilities. It's the difference between an agent that simply "works" and an agent that actually works the way your SOC works.

And as AI SOC agents take on more responsibility, this capability is becoming essential.

Why Does Coachability Matter for AI SOC Agents?

Every organization has its own environment, naming conventions, business logic, threat model, and operating procedures. Traditional automation struggles with this diversity because it requires hard-coded steps, rigid rules, and constant maintenance. AI SOC agents break that pattern. They can be guided like people, not programmed like tools.

A helpful analogy: think of an AI SOC agent as a highly capable new hire on your team. They may enter the SOC with years of expertise, deep technical knowledge, and a strong understanding of investigation patterns. But even your best new analyst cannot contribute effectively until they understand:

- Your environment and infrastructure

- Your organizational norms and culture

- Your risk tolerance thresholds

- How your team classifies events

AI SOC agents work the exact same way. They are extremely powerful out of the box, but to operate at their full potential, they need onboarding and continued coaching.

This idea is intuitive in human terms, and it's becoming just as intuitive for teams evaluating AI SOC tools.

What Does Coachability Mean in AI Security Operations?

Coachability breaks down into a few practical AI SOC agent capabilities that teams now explicitly ask for during evaluations. These are no longer "nice to have" features. They are core requirements for getting the most value out of your AI SOC agents.

How Does Context Memory Improve AI SOC Agent Accuracy?

For an AI SOC agent to produce consistently accurate results, it must understand the environment it operates in. That includes:

- Business-specific applications

- Organization-specific false positive scenarios

- Internal naming conventions

- Exceptions that would otherwise look suspicious

- Organizational nuances that shape how alerts should be handled

This knowledge lives in context memory, which makes the AI SOC analyst more accurate in its investigations. It allows the system to learn on the job and remember details that improve future work.

But storing knowledge isn't enough. The system must also:

- Keep context memory up-to-date

- Reconcile conflicting entries

- Validate that context memory items are legitimate

That's why Dropzone AI has built features so that organizations can:

- Review and edit entries

- Manage the full history of changes

- Apply role-based access control (RBAC) so only the right users can add or modify memory

- Approve new entries before they influence investigations

These controls give teams confidence that the agent's environment-specific "knowledge" remains accurate, consistent, and aligned with business requirements.
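To make these controls concrete, here is a minimal sketch of a governed context memory store. It is an illustration only, not Dropzone AI's implementation or API: the `ContextMemory` class, the role names, and the `PERMISSIONS` table are all hypothetical. It shows three ideas from the list above: role checks on every change, an approval gate before an entry can influence investigations, and a per-entry history of changes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"


# Hypothetical role model: which roles may perform which actions.
PERMISSIONS = {
    "analyst": {"propose"},
    "soc_lead": {"propose", "edit", "approve"},
}


@dataclass
class MemoryEntry:
    key: str
    text: str
    status: Status = Status.PENDING
    # Each history item is (timestamp, user, action, text at that time).
    history: list = field(default_factory=list)


class ContextMemory:
    def __init__(self):
        self._entries = {}

    def _check(self, role, action):
        # RBAC: reject any action the role is not explicitly granted.
        if action not in PERMISSIONS.get(role, set()):
            raise PermissionError(f"role {role!r} may not {action}")

    def _record(self, entry, user, action):
        stamp = datetime.now(timezone.utc)
        entry.history.append((stamp, user, action, entry.text))

    def propose(self, user, role, key, text):
        self._check(role, "propose")
        if key in self._entries:
            raise ValueError(f"entry {key!r} already exists; edit it instead")
        entry = MemoryEntry(key=key, text=text)
        self._entries[key] = entry
        self._record(entry, user, "propose")
        return entry

    def edit(self, user, role, key, text):
        self._check(role, "edit")
        entry = self._entries[key]
        entry.text = text
        entry.status = Status.PENDING  # an edited entry must be re-approved
        self._record(entry, user, "edit")

    def approve(self, user, role, key):
        self._check(role, "approve")
        entry = self._entries[key]
        entry.status = Status.APPROVED
        self._record(entry, user, "approve")

    def for_investigation(self):
        # Only approved entries are ever exposed to the agent.
        return [e.text for e in self._entries.values()
                if e.status is Status.APPROVED]


memory = ContextMemory()
memory.propose("dana", "analyst", "vuln-scanner",
               "10.0.5.12 is the approved internal vulnerability scanner.")
pending_view = memory.for_investigation()   # still empty: not yet approved
memory.approve("lee", "soc_lead", "vuln-scanner")
approved_view = memory.for_investigation()  # now contains the entry text
```

In this sketch an analyst can propose an entry but cannot approve it, and any edit sends the entry back to pending. A real product would persist this state and likely offer richer roles and workflows.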

In human terms, this is the digital equivalent of maintaining a clean, well-governed internal wiki for a new hire. One that tells them how your organization works and who they can trust as a source of truth.

The AI can glean much of this context itself by reading through Jira tickets, existing wikis, and documentation, but crucial up-to-date details still need to be entered into the system.

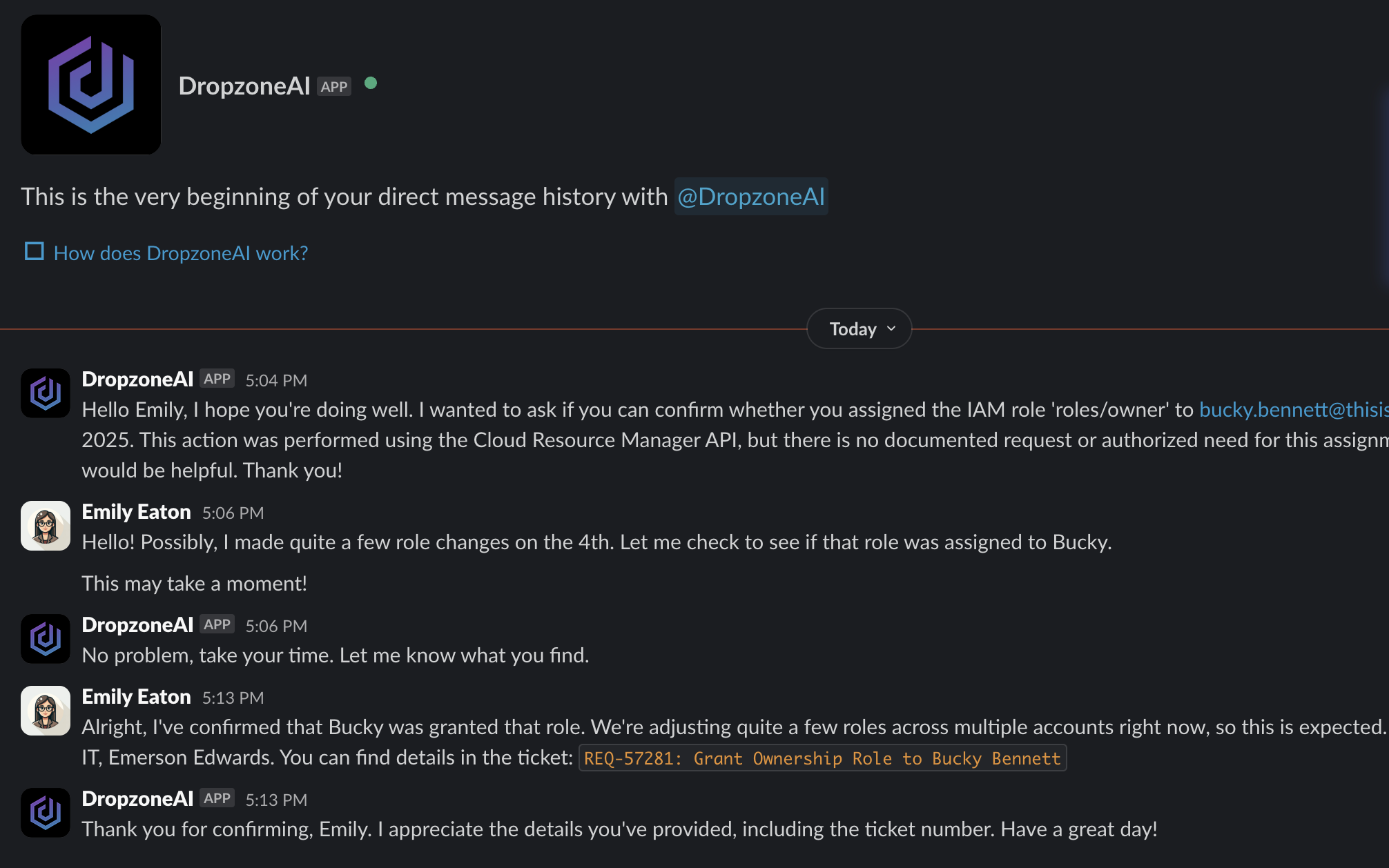

Dropzone's AI Interviewer feature is an example of how crucial context that exists only in people's heads can be captured in the system. The Dropzone system decides when an alert investigation needs extra details from a user and can then reach out to that user via Slack or Microsoft Teams with clarifying questions, such as "Did you mean to grant these privileges to this account?"

Sometimes crucial context is in people's heads. Features like Dropzone's AI Interviewer can ask users questions in Slack and Microsoft Teams to extract that information.

How Can Teams Encode SOPs Without Writing Code?

Security teams have established ways of handling specific alert types through:

- Standard operating procedures (SOPs)

- Investigation runbooks

- Alert-specific playbooks

These documents encode patterns and conventions that reflect their environment and priorities.

In some cases, this is to ensure that alerts are consistently and thoroughly investigated by analysts. As an example, Andrew Jerry, our Lead SOC Automation Engineer at Dropzone, wrote a blog post that helps you understand what a runbook for a phishing alert investigation might include.

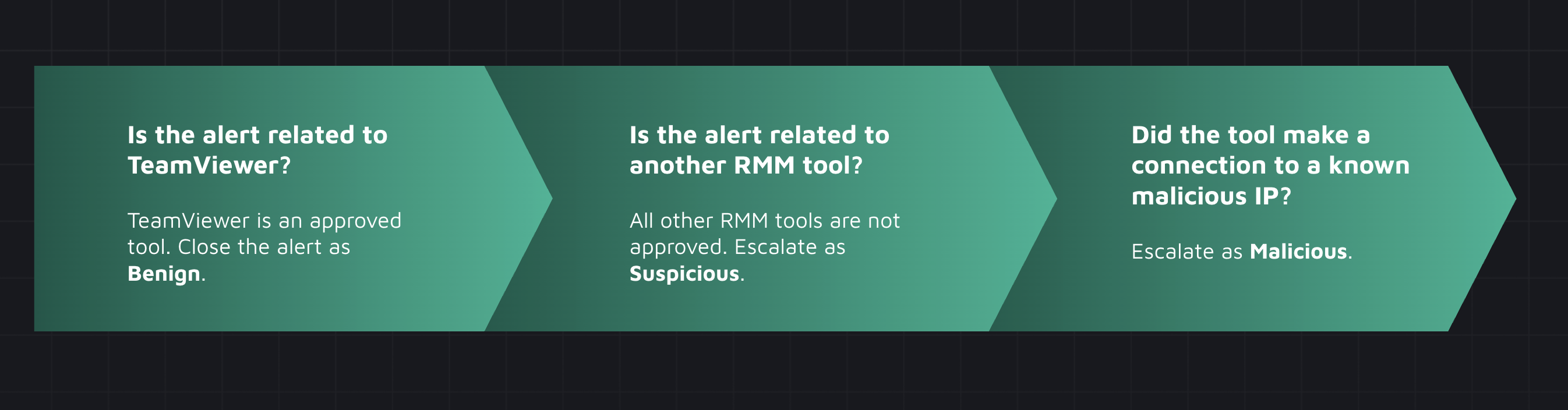

AI SOC agents can follow those same organization-specific rules. Custom instructions in Dropzone AI allow teams to easily give an agent specialized guidance on top of the expertise it already has:

- How to classify alerts

- When an alert type is considered benign

- What conditions should trigger an automated containment action

- How to escalate findings

- Which events deserve additional scrutiny

Instead of writing logic, teams can instruct the AI SOC agent to weigh certain criteria when it determines the disposition of an alert. The AI interprets the rule, understands the intent, and applies it in context.

This is how human analysts learn and follow instructions too: You explain the reasoning, not the syntax.
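As a rough illustration of "explaining the reasoning, not the syntax," the sketch below stores SOP guidance as plain-language text and collects the pieces relevant to one alert type. Everything here is an assumption made for illustration: the `Instruction` type, the `build_guidance` helper, the `"*"` wildcard, and the sample instructions are hypothetical, and the source does not describe how Dropzone AI processes custom instructions internally. The point is only that the team's input is prose handed to a reasoning model as context, rather than branching logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    alert_type: str  # e.g. "phishing"; "*" applies to every alert type
    text: str        # plain-language guidance written by the SOC team


def build_guidance(alert_type, instructions):
    """Collect the plain-language instructions relevant to one alert type."""
    relevant = [i.text for i in instructions
                if i.alert_type in (alert_type, "*")]
    if not relevant:
        return "No organization-specific instructions for this alert type."
    lines = "\n".join(f"- {text}" for text in relevant)
    return f"Organization-specific guidance for {alert_type} alerts:\n{lines}"


# Hypothetical instructions a team might write; none of this is code logic.
INSTRUCTIONS = [
    Instruction("phishing",
                "Treat emails from our payroll vendor's domain as benign "
                "unless they contain an attachment."),
    Instruction("phishing",
                "Escalate any message that targets the finance team."),
    Instruction("*",
                "Give extra scrutiny to activity on executive accounts."),
    Instruction("impossible_travel",
                "Logins through the corporate VPN exit nodes are expected."),
]

phishing_guidance = build_guidance("phishing", INSTRUCTIONS)
print(phishing_guidance)
```

The resulting text would be supplied to the agent alongside the alert data, and the model is then responsible for interpreting intent. Changing how an alert type is handled means editing a sentence, not a rule engine.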

What Governance Features Should AI SOC Agents Include?

As AI SOC agents assume greater autonomy, teams want confidence, auditability, and predictable behavior. These are enterprise-ready features that you will find in mature AI SOC analyst products such as Dropzone AI:

- RBAC for all configuration and coaching functions

- Approval workflows for adding or modifying memory

- Audit logs that track what changed and why

- Visibility into the agent's reasoning and decision path

These controls help maintain consistency, build trust, and ensure the AI's evolution aligns with organizational standards.

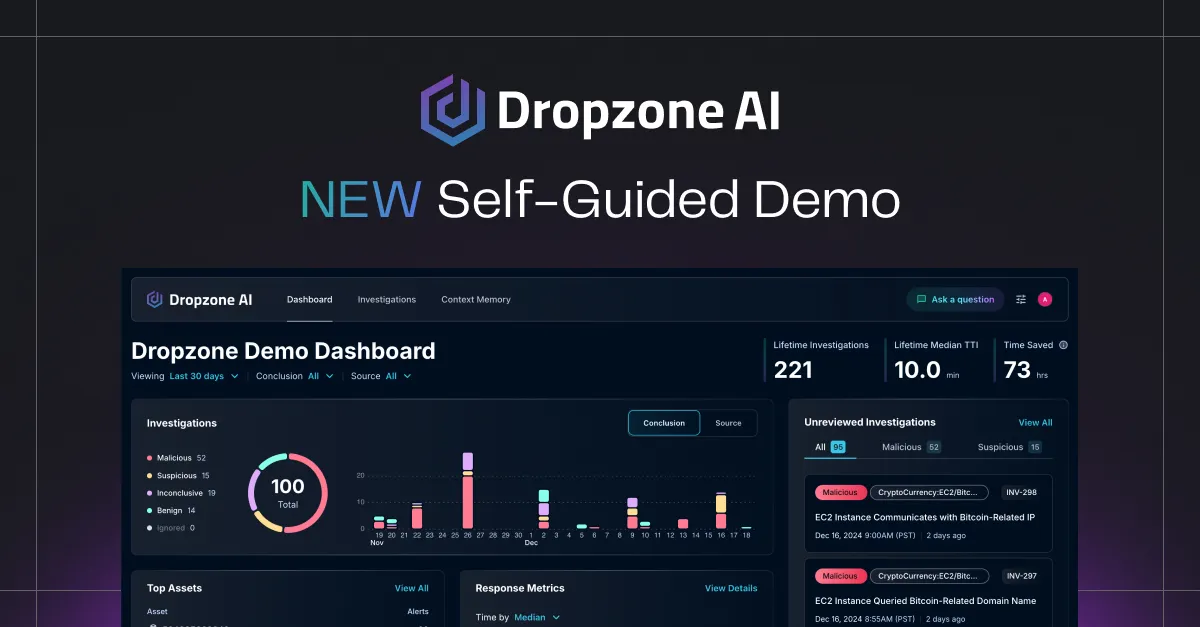

How Does Coachability Drive SOC Performance Outcomes?

Coachability in an AI SOC analyst drives real operational outcomes:

- Higher investigation accuracy because the AI understands the environment

- Fewer false positives as repeated benign patterns are learned

- Faster resolution times because SOPs and coaching become part of the agent's behavior and eliminate ambiguity

- Greater consistency across analysts, shifts, and teams

- Reduced manual work because misclassifications don't repeat and follow-on actions such as escalations and containment can be automated with greater confidence

In short, coachability turns the AI SOC agent from a generic tool into a teammate who understands the organization.

What Should Buyers Evaluate When Selecting AI SOC Agents?

As production deployments of AI SOC agents increase, organizations are demanding more control and enterprise-ready features. At Dropzone, we've seen this in the most sophisticated teams, such as Fortune 500s and advanced MSSPs. They're asking for:

- Fine-grained context memory management

- Approval gates for context knowledge updates

- RBAC across all coaching capabilities, for control and auditability

- Investigation instructions expressed in natural language, not code

- Tunable investigation strategies

- Alignment with internal SOPs and business rules

These requirements are becoming a key part of RFPs, proofs of concept, and bake-offs.

If you're evaluating AI SOC agents today, include these questions in your selection criteria:

- Can the agent be coached through natural language, or does customization require code?

- Can you review, edit, and approve context memory items? Is there a mechanism to keep context memory clean and up to date?

- Is there RBAC for all these features so that you can control and track who makes changes?

- Can you encode SOPs directly into the agent's workflow?

- Can you define custom investigation strategies that reflect your team's practices?

- How transparent is the agent's reasoning, and can you audit its behavior?

In other words: Can this agent operate like a well-trained member of the SOC? That's the goal of the Dropzone AI SOC analyst.

Conclusion

Coachability is becoming the defining capability for AI SOC agents. As organizations adopt these systems to handle more of their alert workload, they need agents that can learn, adapt, and reflect the unique characteristics of their environment.

Even the most skilled analyst needs onboarding and context to perform at their best. AI SOC agents are no different. Skills matter, but context unlocks true effectiveness.

The next generation of SOC automation won't be defined by who has the biggest model or the most integrations. It will be defined by who gives teams the ability to coach their agents into high-performing teammates. Dropzone AI already provides these capabilities, informed by the needs of organizations running AI agents at scale today.