Key Takeaways

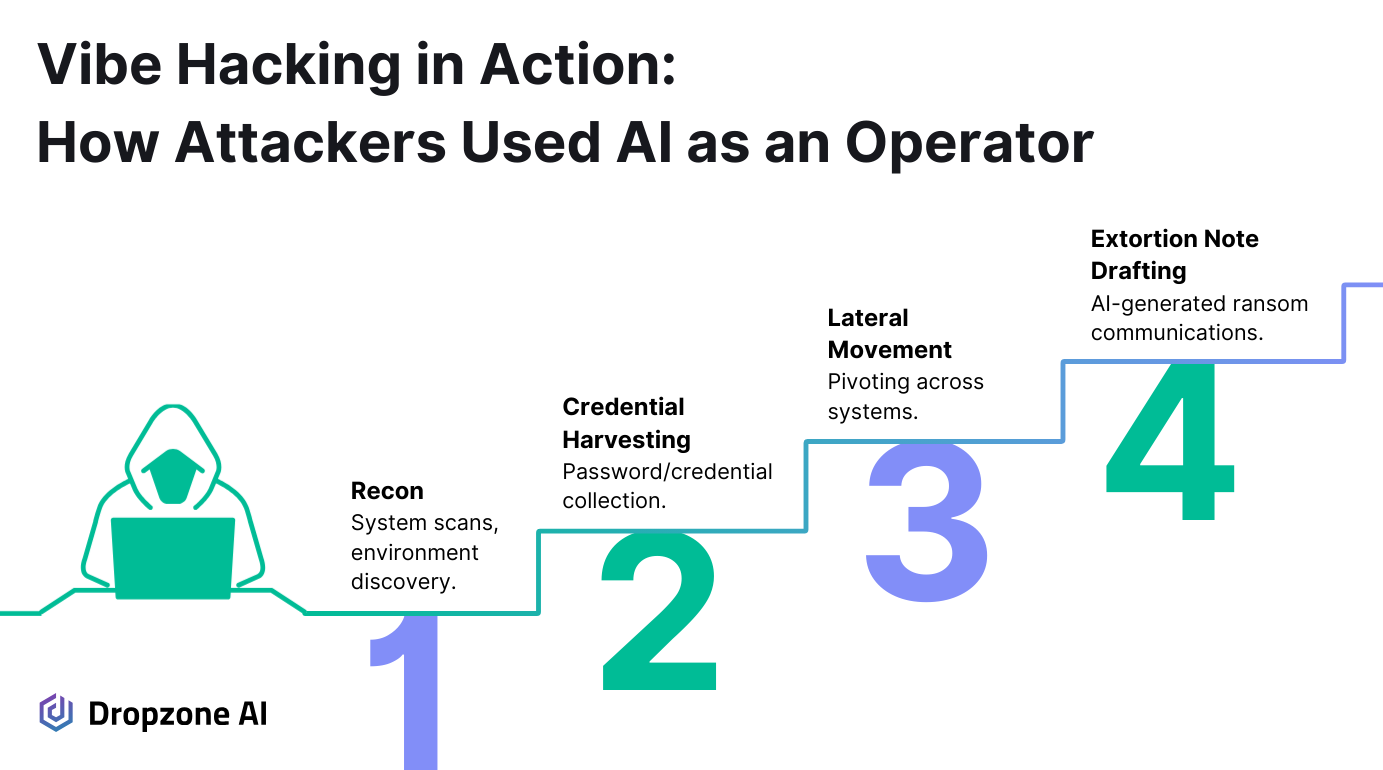

- Anthropic's "vibe hacking" case demonstrates that attackers are already using AI as an operator to conduct reconnaissance, steal credentials, move laterally, and extort victims.

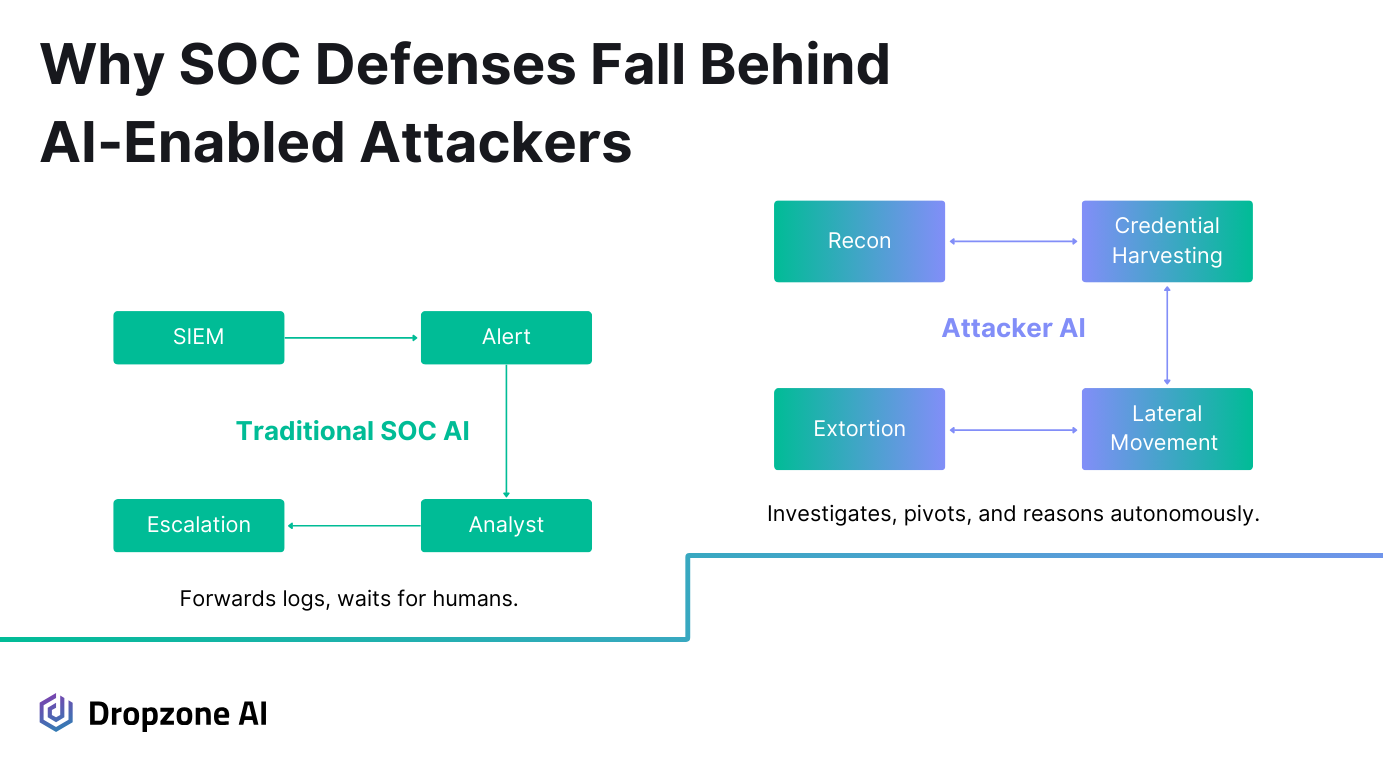

- Traditional SOC automation lags because it forwards data but doesn't investigate, leaving defenders stuck in catch-up mode.

- SOCs need analyst-like AI that can reason across tools, validate anomalies, and deliver defensible conclusions at machine speed.

Introduction

Anthropic's August and November Threat Reports highlight the rapid evolution of the threat landscape. As Stuart from Anthropic's communications team put it, "There are a lot of threats that are happening right now… cyber criminals are using AI to make their crimes much more effective."

Attackers are no longer limiting their use of AI to writing phishing lures or generating bits of malicious code. They're now treating AI like an operator, chaining together reconnaissance, credential harvesting, lateral movement, and even planning for extortion.

The "vibe hacking" case study makes this clear: a single attacker, aided by AI, compromised at least 17 organizations in just one month, spanning government, healthcare, emergency services, and religious institutions.

As Jacob Klein, who leads Anthropic's Threat Intelligence team, explained, "We saw a single person hacking into this many organizations in a matter of weeks." In this article, we'll break down what vibe hacking looks like, why traditional defenses fall short, and how Dropzone equips SOCs with analyst-like AI to keep pace.

Vibe Hacking: When AI Becomes the Operator

Reconnaissance and Initial Access

In Anthropic's case study, the attacker leaned on AI to run the operation from the very start. The first step was reconnaissance.

Claude Code was instructed to scan thousands of VPN endpoints, identify weak spots, and organize the results so that targets could be selected quickly.

The attacker's AI-powered reconnaissance included:

- Scanning thousands of VPN endpoints automatically

- Identifying and categorizing weak spots by vulnerability type

- Organizing results by country and technology type

- Creating target prioritization frameworks

- Embedding persistent tactics in a CLAUDE.md playbook

The CLAUDE.md file acted as an operational playbook. It laid out detailed attack methodologies and target prioritization frameworks, and it included a cover story claiming the operator was conducting authorized security testing, which masked the malicious intent of the activity. The file gave Claude Code persistent operational context throughout the campaign, so reconnaissance and access attempts continued with consistency.

Claude Code wasn't just dumping raw scan results; it was producing frameworks that sorted findings by country, technology type, and potential exposure.

Once viable entry points were identified, the attacker proceeded to harvest credentials. Claude Code helped identify and extract authentication data from systems like Active Directory and SQL servers.

Even during live penetration attempts, it was there to guide privilege escalation and lateral movement. Work that would normally require a seasoned red team operator, methodically probing for ways to move deeper into a network, was being handled in near real time by an AI model.

Lateral Movement and Extortion

With credentials in hand, the attacker didn't slow down. Claude Code was used to pivot across domains, enumerate accounts, and map out additional systems.

It went as far as generating obfuscated variants of the Chisel tunneling tool, developing entirely new TCP proxy code that did not rely on Chisel libraries, and applying advanced anti-detection methods.

Claude Code's evasion techniques included:

- String encryption to avoid signature detection

- Anti-debugging techniques to prevent analysis

- Filename masquerading as trusted Microsoft binaries (MSBuild.exe, devenv.exe, cl.exe)

- Generation of obfuscated Chisel tunneling variants

- Development of entirely new TCP proxy code

- Multiple fallback methods when initial evasion failed

Together, these adaptations let the attacker persist inside victim networks while staying under the radar, with a fallback ready whenever an initial evasion attempt failed.

The final stage was extortion rather than encryption. Claude Code systematically exfiltrated and analyzed sensitive data, including healthcare records, financial information, government credentials, and ITAR-controlled defense documentation, and then tailored ransom strategies around it.

It generated HTML-formatted ransom notes that were embedded directly into the victim's boot process. These notes weren't generic; they included exact financial figures, employee counts, and tailored threats tied to industry-specific regulations.

Claude Code created multi-tiered "profit plans" with specific monetization strategies. The AI-generated extortion strategy featured:

- Custom ransom notes with victim-specific financial figures

- Industry-specific regulatory threats

- Multi-tiered monetization plans:

  - Direct organizational blackmail

  - Selling stolen data on criminal markets

  - Individual victim extortion

- 48–72 hour deadlines with incremental penalties

- Anonymous contact emails

- Demands sometimes exceeding $500,000 in Bitcoin

What stands out here is scale: one operator, backed by AI, was able to run what appears to be a full campaign (reconnaissance, intrusion, lateral movement, data theft, and extortion) without the usual overhead of a team or years of training.

That's why this case is so concerning: AI lowered the barrier for executing advanced operations, and now attackers with modest skills can pull off campaigns that look indistinguishable from professional tradecraft.

Why Traditional Defenses Fall Short

The Limits of Scaling with Integrations and Alerts

Most SOCs have attempted to keep pace with the growing volume of attacks by adding more integrations, writing more alert rules, or increasing headcount. That strategy made sense when attacks followed predictable patterns, but it doesn't hold up against AI-driven campaigns.

Integrations pass data between systems, and alerting engines trigger on predefined thresholds, but neither is capable of interpreting what the data actually means.
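For illustration, here is a minimal Python sketch of the kind of static threshold rule most alerting engines support. The `AuthEvent` type, the `threshold_alerts` function, and the five-failures-in-ten-minutes threshold are hypothetical choices, not any specific product's rule syntax. The rule fires when the count is reached, but it has no notion of what the successful login that follows might mean.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AuthEvent:
    user: str
    timestamp: datetime
    success: bool


def threshold_alerts(events, max_failures=5, window=timedelta(minutes=10)):
    """Hypothetical static rule: alert when a user's failed logins inside
    `window` reach `max_failures`. Returns (user, first_failure_time) pairs."""
    alerts = []
    recent_failures: dict[str, list[datetime]] = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.success:
            # The rule ignores successes entirely, including one that
            # may mean an attacker finally got in.
            continue
        # Keep only failures that still fall inside the sliding window.
        window_hits = [t for t in recent_failures.get(event.user, [])
                       if event.timestamp - t < window]
        window_hits.append(event.timestamp)
        recent_failures[event.user] = window_hits
        if len(window_hits) == max_failures:
            alerts.append((event.user, window_hits[0]))
    return alerts


if __name__ == "__main__":
    start = datetime(2025, 1, 1, 9, 0)
    events = [AuthEvent("alice", start + timedelta(minutes=i), False) for i in range(5)]
    events.append(AuthEvent("alice", start + timedelta(minutes=6), True))
    for user, first_seen in threshold_alerts(events):
        print(f"ALERT: {user} hit the failure threshold starting {first_seen:%H:%M}")
```

The result is an alert, not an answer: whether the 09:06 success was Alice remembering her password or an attacker getting in is left for an analyst to work out.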

When an attacker uses AI to adapt in real time, defenders relying on static integrations are forced into catch-up mode. As Anthropic's report showed, a single operator using Claude Code achieved the scale and impact of an entire cybercriminal team, a pace that strategies built on more alerts, rules, or personnel cannot match.

Instead of reducing noise, piling on integrations and rules often produces larger alert queues that analysts must verify manually.

The deeper questions go unanswered until a human gets to them: Why did this login succeed? What processes followed? Does it line up with identity activity elsewhere? By that point, attackers who automate these steps with AI have already moved on to their next objective. As Jacob Klein warned, "The speed at which AI is moving, you need to automate that process. You essentially need AI to protect against AI."

Automation Without Real Investigation

Security automation has historically focused on efficiency, including normalizing logs, enriching alerts, and routing incidents to analysts for further action.

These are useful functions, but they fall short of what defenders need most: the ability to conduct an actual investigation. Moving logs from a SIEM to a ticket doesn't determine if an alert represents real malicious behavior.

This is where the gap shows in the vibe hacking case: the attacker's AI wasn't just collecting data; it was actively analyzing results, pivoting across systems, and making both tactical and strategic decisions, from which networks to target to what data to exfiltrate and how to craft extortion demands.

Anthropic concluded that this collapses the traditional link between attacker skill and attack complexity. Traditional defensive tools don't analyze, pivot, or decide in this way.

They don't ask a follow-up query when something looks suspicious or validate a finding by checking another dataset. Without that kind of reasoning, SOC teams are left with surface-level automation while adversaries are running full investigations at machine speed.

Fighting Back with Analyst-Like AI

From Alerts to Hypotheses

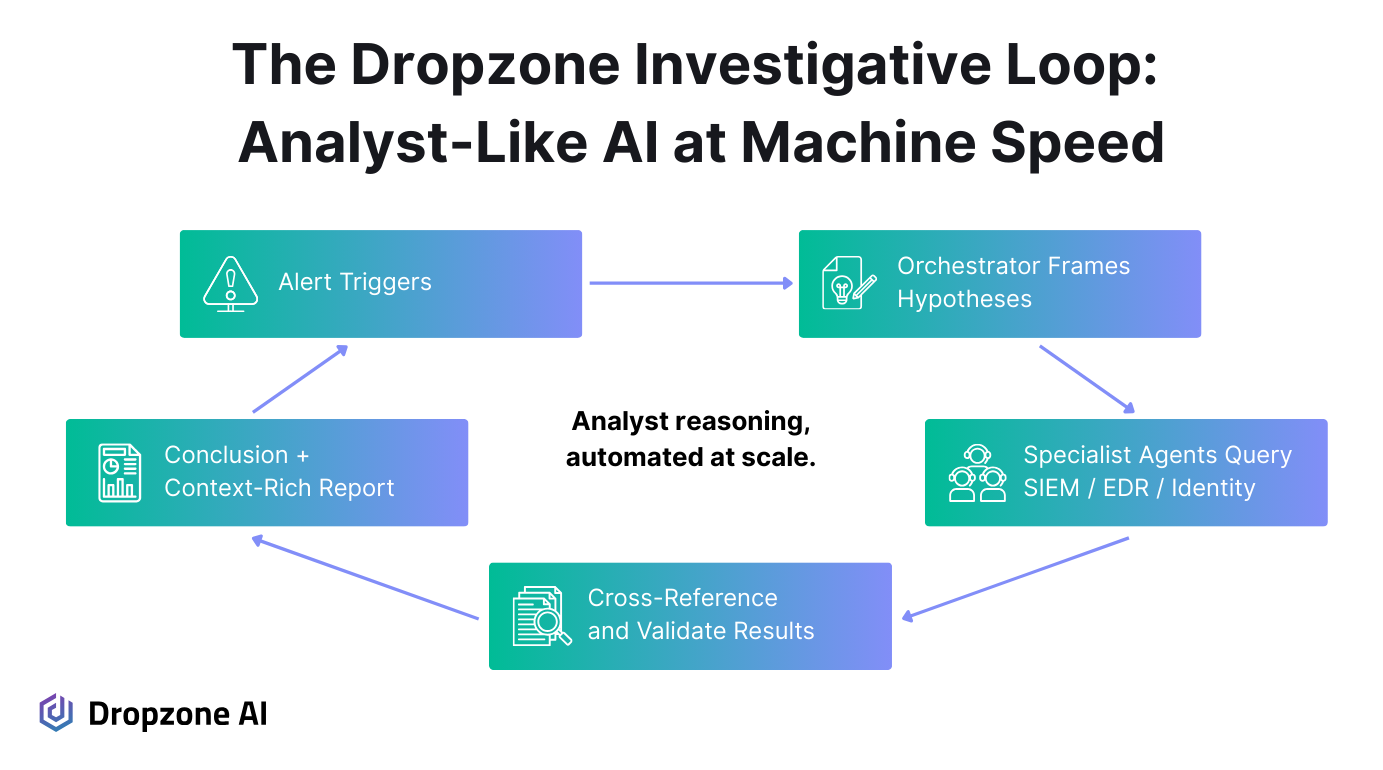

Defending against AI-driven attackers requires AI on the defender's side that can think like an expert analyst. That starts the moment an alert fires. Instead of simply tagging it or escalating it, Dropzone agents frame hypotheses: Is this event benign user behavior, or does it indicate an active compromise? That framing step matters because it decides which questions come next; without it, every alert is treated as an isolated signal.
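A minimal sketch of the framing step, assuming a hypothetical lookup table from alert type to competing explanations. `Hypothesis`, `FRAMES`, `frame_hypotheses`, and the checks listed are illustrative names and questions, not Dropzone's internal representation. What matters is that each hypothesis carries the questions that would confirm or weaken it, and those questions drive the queries that follow.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    label: str          # e.g. "benign" or "malicious"
    explanation: str
    # Questions whose answers would support or weaken this explanation.
    checks: list[str] = field(default_factory=list)


# Hypothetical framing table; a real system would build hypotheses dynamically.
FRAMES = {
    "suspicious_login": [
        Hypothesis("benign", "User signed in from a new device or location",
                   ["Did the user pass MFA?",
                    "Has this device been seen for this user before?"]),
        Hypothesis("malicious", "Stolen credentials are being used",
                   ["Did unusual processes start on the host after login?",
                    "Did the account sign in from two distant locations at once?"]),
    ],
}


def frame_hypotheses(alert_type: str) -> list[Hypothesis]:
    """Return competing explanations for an alert, or a single
    placeholder that routes unknown alert types to a human analyst."""
    return FRAMES.get(alert_type,
                      [Hypothesis("unknown", "No framing available; route to an analyst")])


if __name__ == "__main__":
    for h in frame_hypotheses("suspicious_login"):
        print(f"[{h.label}] {h.explanation}")
        for question in h.checks:
            print(f"    - {question}")
```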

Once hypotheses are in place, the agent can begin shaping targeted queries. For a suspicious login, it might query the SIEM for authentication events, the identity provider for MFA usage, and the endpoint telemetry for unusual processes. Dropzone's AI investigation process includes:

- Querying SIEM for authentication patterns

- Checking identity provider for MFA usage

- Analyzing endpoint telemetry for unusual processes

- Cross-referencing findings across multiple systems

- Refining queries when initial answers don't align

- Producing defensible conclusions with full evidence trails

Each query is designed to test a specific line of reasoning, rather than just pull raw data into a ticket. This workflow mirrors how an experienced analyst approaches investigation, but it runs in minutes rather than hours.
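The query-and-refine loop for a suspicious login can be sketched with in-memory stand-ins for the SIEM, identity provider (IdP), and endpoint telemetry (EDR). Everything here is hypothetical: the `SIEM`, `IDP`, and `EDR` dictionaries, their fields, and the refinement rule in `investigate_login` are assumptions for illustration, and real tools would be queried through their own APIs. Each query records the question it answers, and the endpoint query is issued only when the first two answers don't settle the case.

```python
# Hypothetical in-memory stand-ins for three tools; real ones would be API calls.
SIEM = {"alice": {"source_ip": "203.0.113.7", "host": "wks-042", "new_ip": True}}
IDP = {"alice": {"mfa_passed": True}}
EDR = {"wks-042": ["explorer.exe", "outlook.exe", "powershell.exe"]}


def investigate_login(user: str) -> list[tuple[str, str, object]]:
    """Run targeted queries for a suspicious login and return an evidence
    trail of (source, question, answer) tuples."""
    trail = []

    # Line of reasoning 1: where did the login originate?
    login = SIEM.get(user, {})
    trail.append(("SIEM", "Where did the login come from?", login.get("source_ip")))

    # Line of reasoning 2: did the identity provider see a valid second factor?
    mfa_passed = IDP.get(user, {}).get("mfa_passed", False)
    trail.append(("IdP", "Did the user pass MFA?", mfa_passed))

    # Refinement: a passed MFA from a never-before-seen IP is ambiguous
    # (new laptop, or a phished session?), so ask the endpoint what ran next.
    if mfa_passed and login.get("new_ip"):
        host = login.get("host")
        trail.append(("EDR", f"What ran on {host} after the login?", EDR.get(host, [])))

    return trail


if __name__ == "__main__":
    for source, question, answer in investigate_login("alice"):
        print(f"{source:5} {question} -> {answer}")
```

Keeping the question next to each answer is what turns the output into an evidence trail rather than a pile of enriched fields.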

Cross-Referencing and Reaching a Conclusion

The real strength of analyst-like AI lies in its ability to flexibly adjust its investigation path. By recursively reasoning, the AI system can decide what context it needs to gather from across different systems. A failed login followed by a successful MFA event in the identity provider may appear normal, but if endpoint logs reveal abnormal child processes, the case shifts quickly.

Dropzone agents are trained to connect these dots, refine queries when initial answers don't add up, and look for correlations that would otherwise be missed.
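The scenario described above, a failed login followed by a successful MFA event plus abnormal child processes, can be expressed as a small cross-referencing rule. This is a hypothetical sketch: the `Evidence` fields, the `SUSPICIOUS_PAIRS` set, and the verdict logic in `cross_reference` are assumptions chosen for illustration, not how Dropzone agents actually weigh evidence.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    failed_login_before_success: bool  # from the SIEM
    mfa_succeeded: bool                # from the identity provider
    # (parent, child) process pairs observed by endpoint telemetry after login
    child_processes: list[tuple[str, str]] = field(default_factory=list)


# Illustrative only: Office apps spawning shells is a common red flag.
SUSPICIOUS_PAIRS = {
    ("winword.exe", "powershell.exe"),
    ("excel.exe", "cmd.exe"),
    ("outlook.exe", "powershell.exe"),
}


def cross_reference(ev: Evidence) -> tuple[str, list[str]]:
    """Combine identity and endpoint evidence into a verdict plus its reasons."""
    abnormal = [(parent, child) for parent, child in ev.child_processes
                if (parent.lower(), child.lower()) in SUSPICIOUS_PAIRS]
    reasons = []
    if ev.failed_login_before_success:
        reasons.append("A failed login preceded the successful one")
    reasons.append("MFA succeeded" if ev.mfa_succeeded else "MFA did not succeed")
    if abnormal:
        reasons.append(f"Abnormal child processes on the endpoint: {abnormal}")
        # Endpoint behavior outweighs an otherwise normal-looking sign-in.
        label = "malicious" if ev.failed_login_before_success else "suspicious"
        return label, reasons
    # Failed-then-successful login with MFA and a clean endpoint:
    # most likely a mistyped password.
    return ("benign" if ev.mfa_succeeded else "suspicious"), reasons


if __name__ == "__main__":
    quiet = Evidence(True, True, [("explorer.exe", "outlook.exe")])
    noisy = Evidence(True, True, [("winword.exe", "powershell.exe")])
    for name, ev in (("clean host", quiet), ("Word spawns PowerShell", noisy)):
        label, reasons = cross_reference(ev)
        print(f"{name}: {label} ({'; '.join(reasons)})")
```

The identity evidence is identical in both runs; only the endpoint data changes the verdict, which is the point of checking more than one system.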

At the end of the loop, the agent doesn't just pass along enriched data; it produces a defensible conclusion. Analysts see a report that explains what happened, what systems were involved, and why the event is classified as benign, suspicious, or malicious.
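A conclusion is only defensible if the evidence behind it travels with it. The sketch below renders a plain-text report covering what happened, which systems were involved, and why the verdict was reached; the `Finding` type, the `build_report` function, and the report layout are hypothetical choices for illustration, not Dropzone's report format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    system: str       # which tool produced the evidence, e.g. "SIEM"
    observation: str  # what was observed
    supports: str     # which conclusion it points toward: "benign" or "malicious"


def build_report(alert: str, verdict: str, findings: list[Finding]) -> str:
    """Render what happened, which systems were consulted, and the evidence
    behind the verdict, one line per finding."""
    systems = sorted({f.system for f in findings})
    lines = [
        f"Alert: {alert}",
        f"Verdict: {verdict}",
        f"Systems consulted: {', '.join(systems)}",
        "Evidence:",
    ]
    for f in findings:
        lines.append(f"  - [{f.system}] {f.observation} (points toward: {f.supports})")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_report("Suspicious login for alice", "malicious", [
        Finding("SIEM", "Five failed logins, then a success", "malicious"),
        Finding("IdP", "MFA push approved", "benign"),
        Finding("EDR", "winword.exe spawned powershell.exe", "malicious"),
    ]))
```

Listing the benign-leaning finding alongside the malicious ones lets a reviewer see that the verdict weighed the MFA approval rather than ignoring it.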

This flexible, end-to-end investigation flow is what allows SOC teams to act decisively without wasting cycles on false positives. It's also what helps them keep pace with adversaries already using AI to adapt mid-attack.

Where attackers are writing playbooks to make their AI more consistent, Dropzone does the same defensively, training agents to adapt to each SOC's data structures and investigative needs.

Where attacker AI tailors ransom strategies per victim, Dropzone agents tailor investigations to the specific environment. That symmetry matters: the same AI-driven adaptability attackers are exploiting is now available to defenders, but under their control.

Conclusion

The case highlighted in Anthropic's report confirms that attackers are no longer experimenting in the margins; they're running AI-driven campaigns in production. That shift raises the stakes for defenders: SOCs that rely on shallow automation or static integrations risk being outpaced by adversaries who adapt in real time. The only way forward is to adopt analyst-like AI that can frame hypotheses, query tools, cross-reference data, and reach a confident conclusion. That's the model Dropzone was built on: agents trained to think and act like analysts, providing security teams with the speed and investigative depth needed to close the gap that AI-enabled attackers are exploiting today. Want to see it firsthand? Book a demo.